Introduction

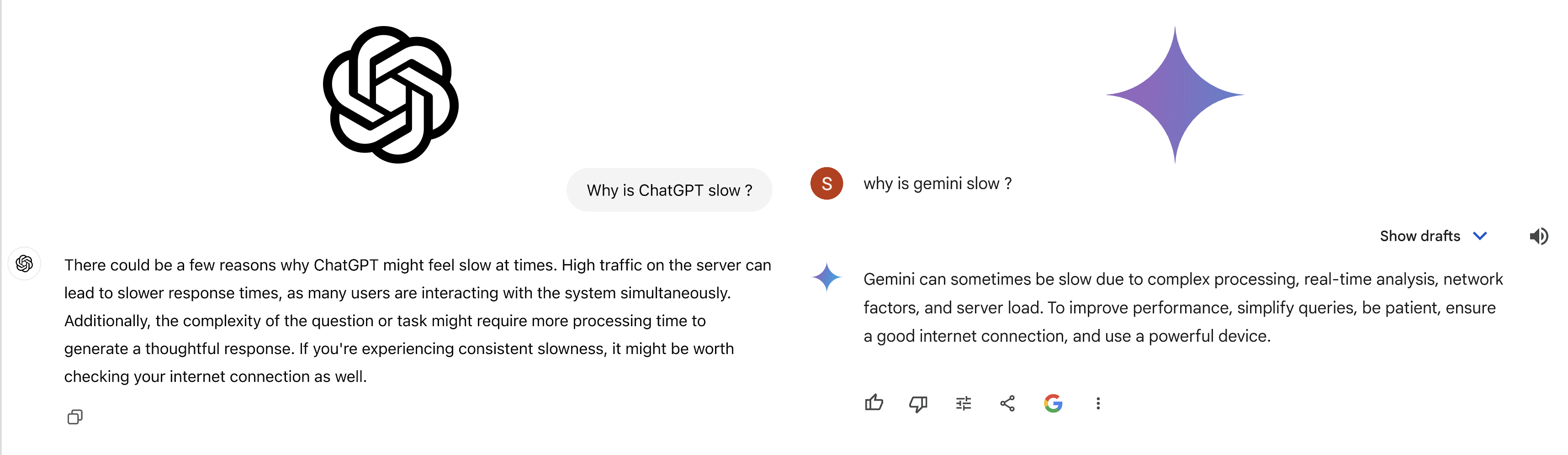

If you use AI chatbots like ChatGPT and Gemini every day, you have probably seen these normally responsive chatbots become sluggish. There are several possible reasons, but two common ones are heavy demand and slow network connectivity. Sophisticated AI models, such as those in the GPT family, demand substantial compute power and energy, so nearly all the AI products we use rely on cloud computation. The speed of a response therefore depends on both the load on the cloud processors and the user’s internet connection.

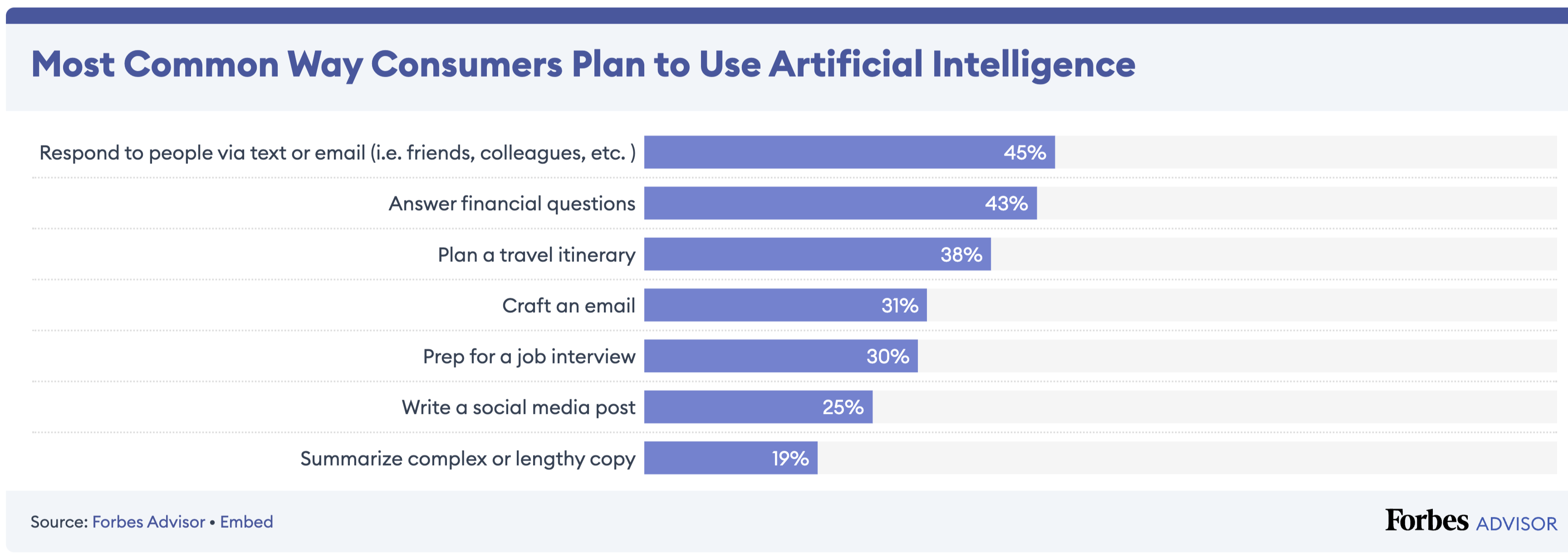

But do all tasks require such massive compute power and cloud processing? Many of the most common things people ask AI to do are everyday writing tasks: drafting emails, replying to texts, composing social media posts, and correcting grammar. These tasks do not need huge compute power, and using a highly sophisticated LLM for them can be overkill. This is where models like Gemini Nano come into play!

Gemini Nano, from the Gemini Family

Google’s Gemini family has four models: Nano, Flash, Pro, and Ultra. As the names suggest, each handles tasks of increasing complexity. Gemini Nano is the smallest of them: still a large language model, but a compact version of the full Gemini model designed to run on devices with relatively little compute power, such as smartphones, tablets, and personal computers. With Gemini Nano on-device, users can perform AI-driven tasks with or without internet connectivity.

Capabilities of Nano

Just as humans take in information by seeing, hearing, and reading, Gemini Nano is a multimodal LLM that works with images, audio, and text. It can assist with:

Understanding images: Providing clear descriptions of images and objects within them.

Understanding voice: Transcribing spoken language into text.

Text summarization: Offering concise summaries of lengthy documents and texts.

Developers Can Leverage the Capabilities of Nano on Their Own Devices

On Android smartphones that ship with Gemini Nano, the model’s capabilities are available not only to platform-native apps such as Gboard, Recorder, and TalkBack (Android’s screen reader) but also to any third-party Android application, although third-party access is currently experimental. Google’s AICore system service manages and updates the model on the device without any effort from users. Android developers can experiment with Nano at no additional subscription cost beyond the cost of a supported device, using the Google AI Edge SDK, which provides access to the Gemini Nano APIs for integrating AI capabilities into their apps.

On the desktop, Google Chrome (the most widely used browser) integrates Gemini Nano. Because Nano is built into the browser, end users need not worry about downloading or updating the model themselves. Chrome’s AI runtime optimizes its use of the hardware available on the user’s device for the best performance. To try it, developers toggle a few settings in the browser to activate Nano and then interact with it through browser APIs provided by Google, which are currently experimental and in early preview.
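Because on-device support depends on the hardware and on whether the model is ready, an app typically needs a fallback path. The following is a minimal TypeScript sketch of that decision, not Chrome’s actual API: the `OnDeviceStatus` values, the `Environment` shape, and `chooseBackend` are hypothetical names introduced for illustration, and the experimental browser APIs may report availability differently.

```typescript
// Hypothetical availability states for a built-in model; the real
// experimental browser APIs may report availability differently.
type OnDeviceStatus = "ready" | "downloading" | "unsupported";

type Backend = "on-device" | "cloud" | "unavailable";

interface Environment {
  onDevice: OnDeviceStatus; // state of the local model
  online: boolean;          // whether a network connection exists
}

// Prefer the local model when it is ready; otherwise fall back to a
// cloud model if the network allows it.
function chooseBackend(env: Environment): Backend {
  if (env.onDevice === "ready") return "on-device";
  if (env.online) return "cloud";
  return "unavailable";
}

console.log(chooseBackend({ onDevice: "ready", online: false }));      // "on-device"
console.log(chooseBackend({ onDevice: "unsupported", online: true })); // "cloud"
```

Keeping the decision in one small pure function makes it easy to swap in whatever availability signal the real API ends up exposing.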

Nano Revolutionizing the Digital Realm!

Nano can assist in a wide range of use cases with its multimodality. Let’s see a few demonstrated by Google:

Call Notes: On supported Pixel phones, Call Notes uses Nano to generate summaries of calls. The same summarization capability is available in the Pixel Recorder app.

Gboard: Google’s keyboard uses Nano to offer smart reply suggestions in instant messaging apps.

TalkBack: Android’s screen reader accessibility feature uses Nano to provide clear descriptions of images and the objects in them.

When it comes to Chrome, some promising features include language detection and translation. Web apps can use Nano to detect the user’s language and then personalize workflows by region or language. Additionally, developers have traditionally relied on translation files or translation APIs to localize web apps; with Nano, entire web apps could potentially be localized on the fly for end users.
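To make the language-detection idea concrete, here is a small TypeScript sketch of how a web app might turn detection results into a locale choice. It is an illustration under stated assumptions: the `Detection` shape (a language tag plus a confidence score), the `pickLocale` function, and the 0.6 threshold are hypothetical and are not taken from Chrome’s APIs.

```typescript
// Hypothetical shape of one language-detection result: a BCP 47 language
// tag plus a confidence score between 0 and 1.
interface Detection {
  language: string;
  confidence: number;
}

// Return the most confident detected language that the app supports,
// ignoring results below the threshold; otherwise return the fallback.
function pickLocale(
  detections: Detection[],
  supported: string[],
  fallback: string,
  minConfidence = 0.6,
): string {
  const ranked = [...detections].sort((a, b) => b.confidence - a.confidence);
  for (const d of ranked) {
    if (d.confidence >= minConfidence && supported.includes(d.language)) {
      return d.language;
    }
  }
  return fallback;
}

// Example input, made up for the demonstration.
const detections: Detection[] = [
  { language: "fr", confidence: 0.91 },
  { language: "en", confidence: 0.07 },
];
console.log(pickLocale(detections, ["en", "fr", "de"], "en")); // "fr"
```

The confidence threshold keeps the app from switching languages on a weak guess, which matters for short inputs such as a single search query.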

Privacy Claim by Google

Google claims that the AICore service on the Android platform is compliant with Private Compute Core (PCC) and follows strict privacy rules. Because user prompts are processed locally, no server calls are needed. Layers of protection are applied while requests are processed with Nano: each request is isolated, and both its input and its output are evaluated against safety filters to prevent sensitive data from leaking to apps.
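The idea of checking both sides of a request can be pictured as a wrapper around the model call. The TypeScript sketch below is only a rough illustration: the pattern-based `isSafe` filter, the `guardedGenerate` function, and the stub model are hypothetical stand-ins, and Google’s actual safety filters are certainly more sophisticated than a single regular expression.

```typescript
// A hypothetical model is just a function from prompt to reply.
type Model = (prompt: string) => string;

// Toy filter: flags text that looks like a 16-digit payment card number.
// This is a stand-in for illustration, not a real safety filter.
const CARD_PATTERN = /\b\d{4}[ -]?\d{4}[ -]?\d{4}[ -]?\d{4}\b/;

function isSafe(text: string): boolean {
  return !CARD_PATTERN.test(text);
}

type Result =
  | { ok: true; text: string }
  | { ok: false; reason: "input-blocked" | "output-blocked" };

// Evaluate the prompt before it reaches the model, and the reply before
// it reaches the calling app.
function guardedGenerate(model: Model, prompt: string): Result {
  if (!isSafe(prompt)) return { ok: false, reason: "input-blocked" };
  const reply = model(prompt);
  if (!isSafe(reply)) return { ok: false, reason: "output-blocked" };
  return { ok: true, text: reply };
}

// Stub model used only for the demonstration.
const echoModel: Model = (prompt) => `Summary: ${prompt}`;

console.log(guardedGenerate(echoModel, "Meeting moved to Friday"));
console.log(guardedGenerate(echoModel, "My card is 4111 1111 1111 1111"));
```

The first call returns the stub’s reply, while the second is rejected before the model ever sees the prompt.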

Smartphones with Gemini Nano

Gemini Nano needs considerable compute power for generative AI tasks. As of today, it is available on high-end smartphones equipped with sophisticated chips such as Google’s Tensor G4 and Qualcomm’s Snapdragon 8 Gen 3. Mobile chips will continue to evolve to meet the growing demand for on-device AI, which should widen its reach. Devices that support Gemini Nano include:

Google Pixel 9 series

Google Pixel 8 series

Samsung Galaxy S24 series

Samsung Galaxy Z Fold 6

Samsung Galaxy Z Flip 6

Realme GT 6

Motorola Edge 50 Ultra

Motorola Razr 50 Ultra

Xiaomi 14T and 14T Pro

Xiaomi MIX Flip

Conclusion

On-device AI is a new frontier, and many of its features are still offered only in experimental or early preview stages. So far, only the few consumers with high-end devices have experienced it. However, as more powerful chipsets and mobile devices emerge every year, on-device AI is likely to become commonplace in the coming years and to offer even more powerful features.