Towards a more Visible Enterprise – with Data Lineage and Ancestry

Visualizing data lifecycle helps business and IT clients better understand KEY applications, processes, and their dependencies. It provides a roadmap to data visualization and usage all through the data journey. Most importantly, it enables the ability to take back control of data (entities) and their footprint and deliver trusted data to aid empowered business decisions.

What is Data Lineage?

Data lineage is essentially the ancestry or provenance of an organization, sources, and applications. It provides an ongoing update on the origination of any data asset, its navigation, transformation, storage mechanism, and access history, along with other key metadata. Provenance and/or data lineage provides valuable data insights and helps organizations know where data originates, its journey(s), how it is/has been used, and by whom.

Data lineage answers, “Where is this data coming from and where is it going throughout the lifecycle?”

It traces the ancestry and interdependencies of the data entities in a catalogue and provides a roadmap for data, which in turn enables business users better understand and trust it.

Importance of Data Lineage

Data lineage provides ancestry visibility on how data traverses through the organization, sources, and applications. It traces multiple parameters like availability, ownership, sensitivity, and data quality.

Data lineage is essential for data governance, regulatory compliance, and data/cyber security. The viewability enabled by mapping and verifying the data accessibility is like a virtual magnifying glass – critical for data transparency and history. It depicts how and where data has been updated, its impact, and its usage all across the application pipeline. Data lineage helps increase data security, cyber governance, and audit compliance by providing organizations the visibility and transparency to identify potential risks, gaps, and flaws.

Finally, it helps proactively identify and fix data gaps for industry applications by ensuring policy coherence and consistency and controls are in place.

Data lineage gives users ‘peace of mind’ in data modernization transformation initiatives and migrations in both, on-premises and the cloud. It helps visualize data flows and objects and enables a deeper understanding to help predict the effect on downstream applications and processes.

It provides confidence about data with respect to the following:

- Data governance for regulatory and audit compliance and guidelines

- PII information in data sources and the appropriate approaches to develop personalized customer initiatives with appropriate safeguards and compliance, along with a ‘deep’ understanding of data distribution

- Identify data and metadata appropriate for migration to/from the cloud, and assess its impact on the sources/applications

- How do the data quality, conformity, consistency, and completeness change across the lifecycle and hops?

Key Techniques to be Adopted for Comprehensiveness

1. Cover the Full Breadth of Metadata

For comprehensive data lineage, one needs to have an updated inventory and plan scan of all data origination and transformation sources across enterprise environments, including legacy, mainframe systems, and custom-coded enterprise and ERP applications. It is critical to leverage metadata from ETL and discovers lineage from applications even without direct access and inside firewalls and gateway(s).

2. Extraction of Deep Metadata and Lineage Across Complex Data Sources

It is imperative to remove opacity and obfuscation to view data as it traverses across enterprise landscapes and applications, typically including multiple data sources, such as on-premises data sources, data warehouses, databases, data lakes, and mainframe systems, or SaaS applications and multi-cloud environments. Visibility should be extended to also includes SQL scripts, ETL software, stored procedure codes, programming languages, and “black box” applications.

3. Leverage Artificial Intelligence (AI) and Machine Learning (ML)

AI algorithms and ML capabilities can derive data lineage even in the cases where it is impractical or otherwise too complicated or there is no direct way or means of extraction, e.g., data is being/has been moved manually through SFTP or using code. Leveraging AI and/or ML capabilities for relationship discovery and impact analysis helps comprehend the ‘black box’ relationships and conduct ‘what-if’ and ‘impact trace analysis’. AI-powered lineage discovery brings insights into “control” functions, such as logical-to-physical models and joins. E.g., if one deletes a column that is being used in a join, it can adversely impact all the downstream reports derived from that join.

Data lineage enables a comprehensive discovery and impacts analysis, especially when the relationships are undocumented, undiscovered, or dynamic.

Automatically extracting technical lineage from various sources, and keeping it updated, including the SQL queries, ETL tools, and BI solutions, helps view even the indirect relationships that influence data movements, such as conditional statements and joins. Along with metadata connectivity across the landscape, extraction needs to be able to automatically stitch and update lineage from these sources, including the metadata. Data lineage is derived from multiple sources to understand data transformations and access — including ETL jobs, SQL scripts, and stored procedures. Lineage views must be presented at different levels, from business and logical views to detailed field-level views, with the ability to drill down into transformation logic.

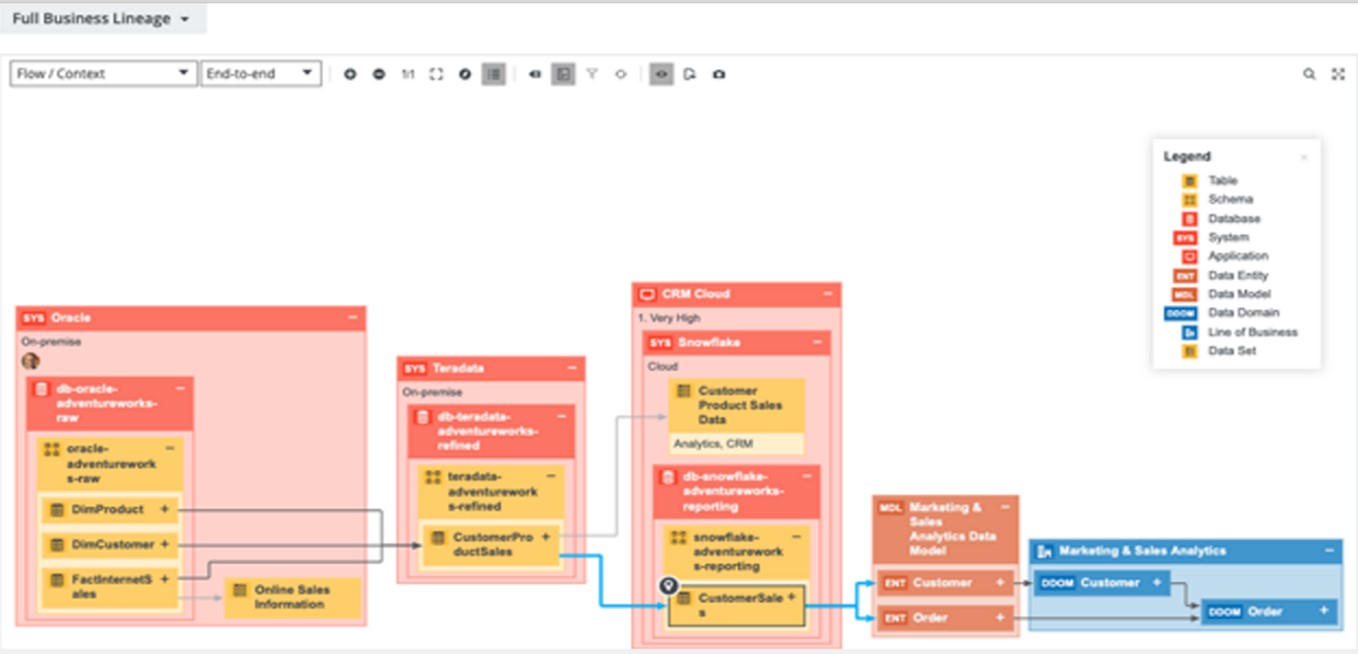

Figure 1: Representation of Data / Metadata Lineage across source(s) and application(s): Business view

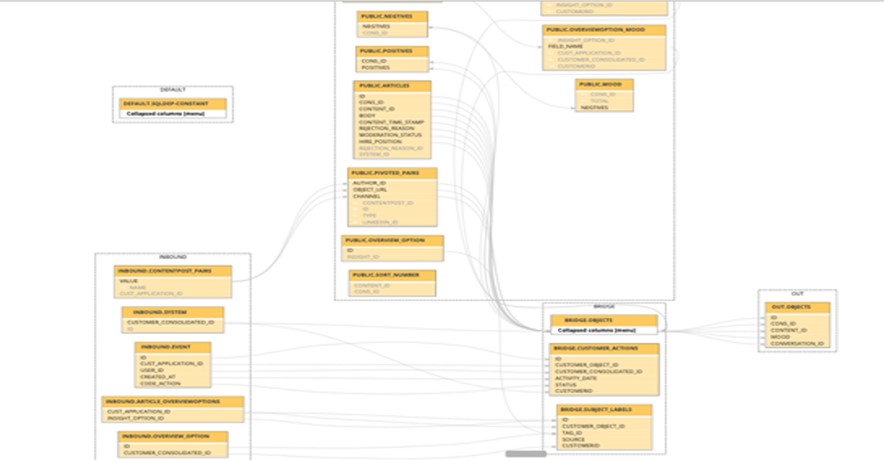

Figure 2: Representation of Data / Metadata Lineage across source(s) and application(s): Technical view

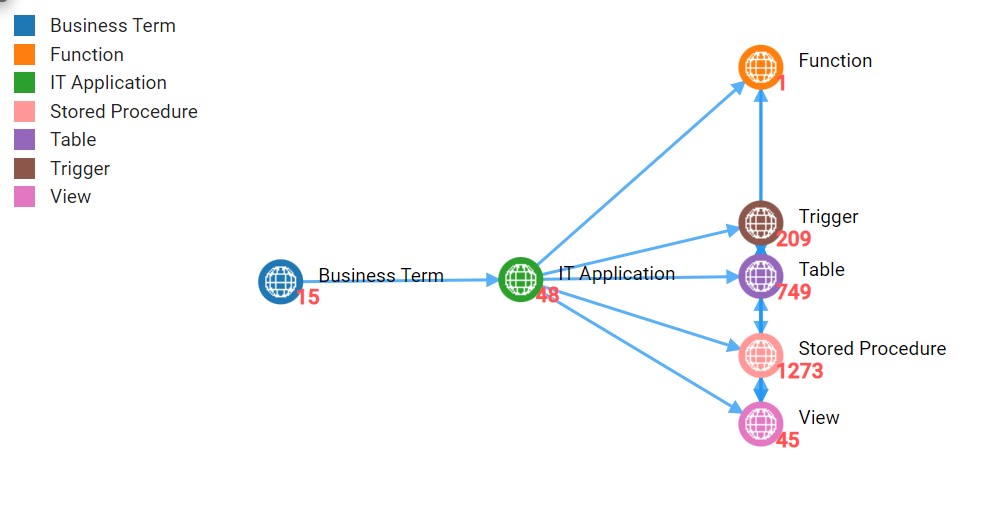

Figure 3: Representation of Data Flow across source(s) and application(s): Technical view

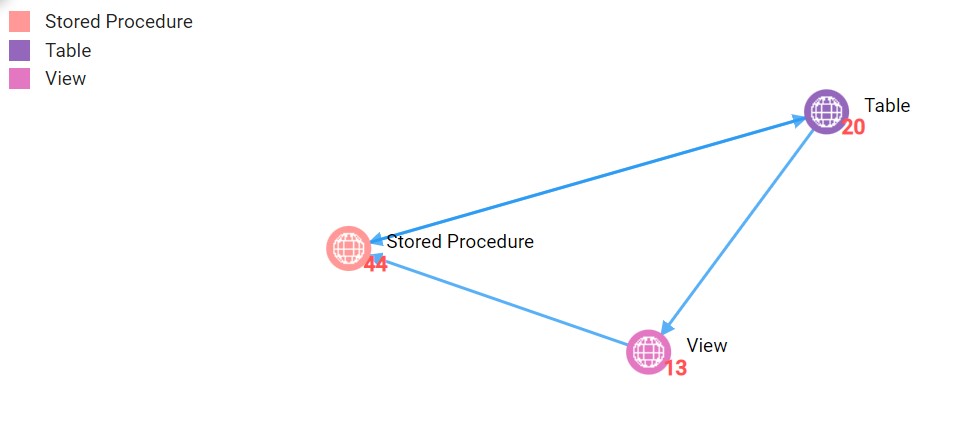

Figure 4: Representation of Data Assets Details with Lineage: Technical view

Visualizing data lifecycle helps business and IT users comprehend processes, systems, and their interdependencies. It provides a roadmap to data uniformity, integrity, timeliness, and compliance throughout the journey. To drive data-driven industry initiatives, a critical foundational requirement is to have a comprehensive view of the lineage.

Data Lineage Best Practices

Finally, a few guiding and overarching principles and best practices to be practiced during the planning and deploying data lineage –

1. Optimize Automate identification and extraction of data lineage

Manually discovering, recording, and/or capturing lineage in Excel or similar static tools is inapt for dynamic applications where data is dynamic, ever-changing, and constantly re-purposed for more customized applications.

2. Inclusiveness – ‘Scope in’ all possible and potential metadata sources

SQL ETL, databases, enterprise, and custom applications create data about data (metadata). This metadata and master data are key to understanding and controlling the data. It is essential to discover and leverage as broad metadata coverage as feasible. Leverage MDM (Master data management) techniques where feasible, and data stewardship where appropriate, to avoid surprises and ensure comprehensiveness

3. Implement incremental and recursive delta extraction of data and metadata

Continuously trace and re-trace the path taken by data through the systems and applications. Project plan all effort and resources necessary to identify, extract metadata, and master data with data lineage from each of these routes as it helps map the touch points, relationships, transformations, and most critically, dependencies among systems, applications, sources, and within the data as data meanders and caters to multiple business reports.

4. Re-check and re-Validate end-to-end processes, data flows, and hierarchical lineage using physical and logical tables

It is imperative to begin from high-level connections across source(s) and application(s) and then drill down into the connected data sets of the data elements, then cross-check and verify the transformations and stored procedures.

This needs to be an iterative procedure for all identified applications and their associated data models. There might be a relevant business requirement to create a warehouse and the dump of respective physical tables and their entities, as need be. This helps create a top-down and parallel bottom-up systematic investigation.

5. Use an organization-wide data catalogue to increase coverage of enterprise source(s) and application(s) – past, present, and planned

Always attempt to leverage AI and ML capabilities and create a master data catalogue that (semi) automatically stitches and suggests lineage from all enterprise source(s) and application(s). This enhances and automates the comprehensive extraction and inference of end-to-end data lineage and touch points from the metadata. Subsequently, plan to future-proof the exercise with an ‘impact analysis’ and/or a ’what if’ Monte Carlo tree view analysis.

Assessing lineage is imperative to assess the authenticity of the source and accuracy of the data a business is using for its computation and analysis. It makes business users susceptible to data deception and deficit, creating biases in critical business decisions. Most importantly, lineage enables the ability to take back control of data (entities) and their footprint and deliver trusted data to aid empowered business decisions.

About the Author

Tushar Subhra Das is a Senior Business Data Analyst with over 10 years of experience in Data Migration and Governance. He has worked with Europe and Australia-based Insurance and Logistics clients for Data migration, MDM and Data Quality, and process governance.

In his current role, Tushar is responsible for APAC and EMEA Data migration deployments and enhancements, including product developments for iDSS as the next-generation industry standard data management platform.

best software company in kochi