Collaborative DataOps – A “hot-button” for modern businesses

The race to embrace data to enable analytics-driven digital transformation is at its peak. Data is growing at breakneck speed: the total volume of data created, captured, copied, and consumed globally is expected to increase from 149 zettabytes in 2024 to 394 zettabytes in 2028¹. However, the companies that can use this data effectively and run analytics on it are more likely to be successful. Until now, companies have leveraged only the limited data available to them for operations; AI has now opened up a far wider variety of data for consumption and decision making.

In our experience, many digital transformation programs fail, and data management is the most important contributor to that failure. It is increasingly clear that traditional methods of data management and governance, such as disconnected decision making and a static, central data strategy, are unlikely to deliver results in today's fast-moving data world. Gartner goes so far as to state that by 2027, 80% of data and analytics (D&A) governance initiatives will fail unless the right governance strategies are deployed².

This is exactly where DataOps, a practice popularized by Andy Palmer in 2015, plays a role. It combines technology, automation, architecture, and a collaborative culture to bring transparency from the source to the consumer of a data product. This paper briefly discusses a nuanced mode of DataOps, which we call “Collaborative DataOps”, and explains why and how it can greatly reduce the risk of failure.

Why do businesses need to embrace collaborative DataOps?

A global provider of financial market data partnered with Infosys Consulting to analyze the proliferation of applications in its data and operations space and to provide a solution that would make the portfolio streamlined, lean, and agile. The core work of acquiring the underlying data from various sources, processing and storing it, running analytics on top of it, and producing a consumer-friendly data product was being performed with varying degrees of efficiency. A fundamental reason for these inconsistencies was that different functions within the organization operated in fragmented ways, often through manual, sequential processes and ad-hoc decision making without a proper handshake between business and technology, which compromised both speed and quality.

This situation is not unique: “27% of chief data and analytics officers report that their most pressing challenge is lack of involvement and support from business stakeholders.”³

Such businesses often struggle to break down silos and enable collaboration among different stakeholders. This has resulted in notable pitfalls such as:

I. Difficulty maintaining data quality, which affects the success and return on investment of AI initiatives. 99% of GenAI adopters have encountered roadblocks, with 42% of data leaders citing data quality as the main obstacle⁴.

II. Significant costs from data security breaches caused by ineffective data handling and inadequate collaboration with Cybersecurity CoEs. In 2024, the average financial impact of a data breach globally was $4.88 million⁵, which highlights the importance of collaborating with security teams, identifying potential vulnerabilities, and training employees to handle data effectively.

III. Compromised decision making due to the challenge of managing dark data. At least 20% of a company’s data is redundant, outdated, or trivial⁶, which creates the risk of generating incorrect insights.

DataOps, a philosophy that combines frameworks, tools, and processes, was envisioned to eliminate these challenges. The term is borrowed from DevOps, a concept that enables a seamless handshake and greater accountability between Development and Operations. Likewise, DataOps is a methodology for the data space to design, develop, implement, transform, operationalize, and govern distributed data across the overall data life cycle.

It principally consists of:

- An agile approach

- Automation that eliminates manual tasks

- A security-driven data platform

All of this is underpinned by a collaborative framework that helps exchange information and facilitates liaison between business stakeholders and IT, including data engineers, analysts, and stewards alike.

Collaborative DataOps is essentially a framework to extract and curate data, expose it through an architecture that may be microservices-based, and generate insights that all participating stakeholders contribute to and leverage, with continuous improvement over time.

Collaboration is the “keyword”, because many stakeholders are party to different functions of the data lifecycle and success hinges on fostering communication and orchestration. This, in turn, directly affects efficiency and quality metrics such as data lead time, data accuracy and consistency, and overall ROI.
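To make these metrics concrete, the sketch below shows one way a team might compute data lead time (elapsed time from data arriving at the source to the data product being available to consumers) and accuracy and consistency rates from delivery records. It is a minimal illustration, not a prescribed method: the `DataDelivery` record shape, the field names, and the definitions used for accuracy and consistency are all hypothetical assumptions, and the sample values are made up.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class DataDelivery:
    """One delivery of a data product (hypothetical record shape)."""
    source_arrival: datetime      # when raw data landed from the source
    consumer_available: datetime  # when the curated data product was published to consumers
    records_checked: int          # records validated against a trusted reference
    records_accurate: int         # records whose values matched the reference
    records_consistent: int       # records that agreed across downstream copies


def lead_time(d: DataDelivery) -> timedelta:
    """Data lead time: elapsed time from source arrival to consumer availability."""
    return d.consumer_available - d.source_arrival


def summarize(deliveries: list[DataDelivery]) -> dict[str, float]:
    """Roll individual deliveries up into shared metrics that all teams report on."""
    if not deliveries:
        raise ValueError("no deliveries to summarize")
    checked = sum(d.records_checked for d in deliveries)
    return {
        "median_lead_time_hours": median(
            lead_time(d).total_seconds() / 3600 for d in deliveries
        ),
        "accuracy_pct": 100 * sum(d.records_accurate for d in deliveries) / checked,
        "consistency_pct": 100 * sum(d.records_consistent for d in deliveries) / checked,
    }


# Made-up sample values, for illustration only.
deliveries = [
    DataDelivery(datetime(2024, 5, 1, 6), datetime(2024, 5, 1, 10), 1000, 980, 990),
    DataDelivery(datetime(2024, 5, 2, 6), datetime(2024, 5, 2, 8), 1000, 960, 970),
    DataDelivery(datetime(2024, 5, 3, 6), datetime(2024, 5, 3, 12), 1000, 990, 1000),
]
print(summarize(deliveries))
```

Using the median rather than the mean for lead time keeps one unusually slow delivery from dominating the figure; whichever definitions an organization chooses, the point is that business and IT agree on them and report the same numbers.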

A framework driven approach to enable collaborative DataOps

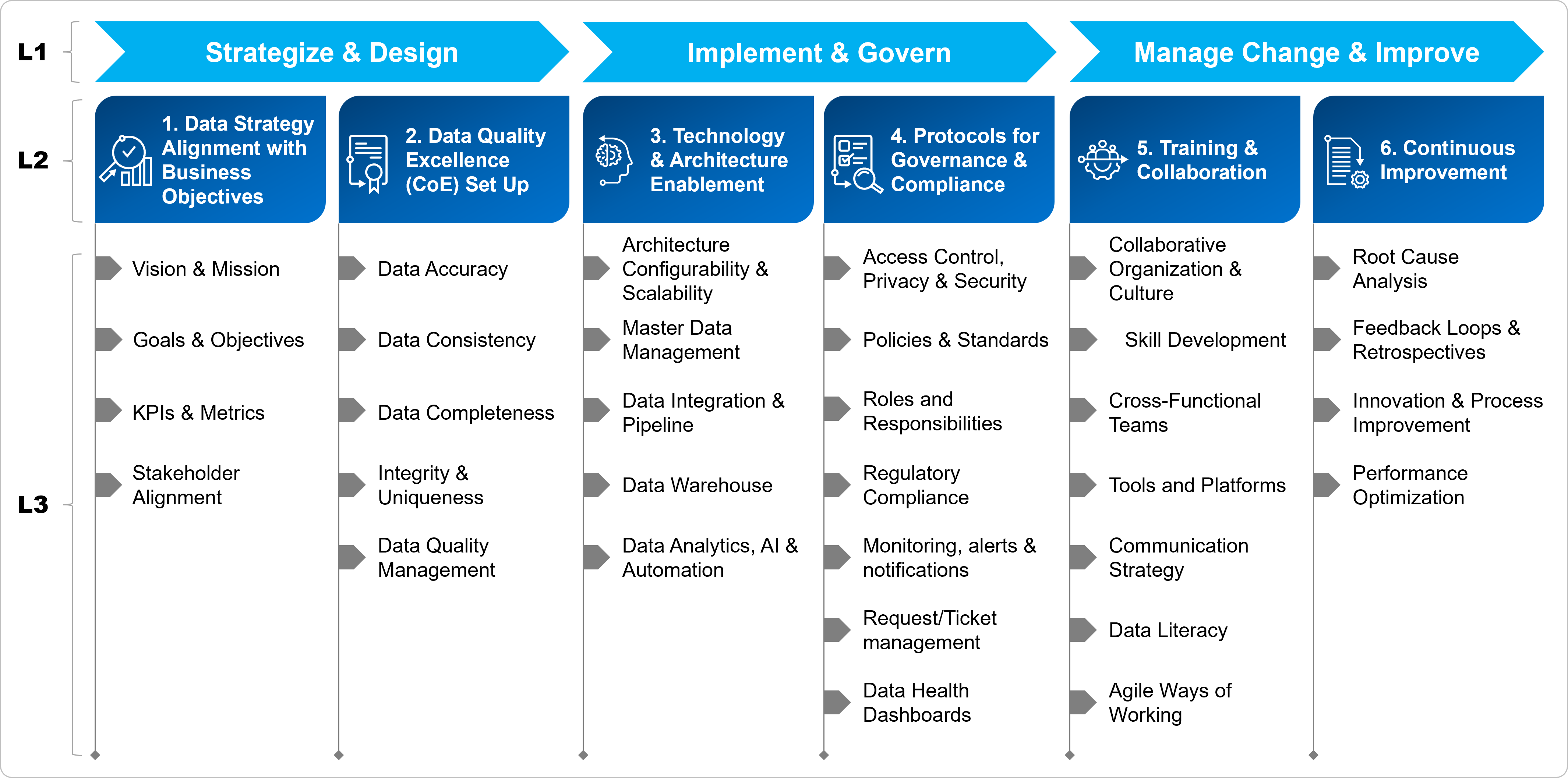

Our experience of working with leading businesses capture six key levers to establish and promote a collaborative environment for DataOps. We have derived a framework that encompasses various dimensions critical for establishing collaborative DataOps. This can serve as a critical enabler for businesses to embark on this approach and unlock the potential of data in their organizations.

Fig 1: Framework for enabling collaborative DataOps

1. Data Strategy Alignment with Business Objectives:

a. Align data strategy with business goals & objectives and use top-down messaging to emphasize the importance of collaboration in data operations and management.

b. Establish data goals & KPIs and link them to business and individual performance.

c. Facilitate cross-functional handshaking to achieve these goals and break down silos.

2. Data Quality Excellence Group (CoE) Set-Up:

a. Establish a center of excellence (CoE) to drive the data quality management initiatives across the organization.

i. Enable the CoE to define metrics & KPIs across all data quality dimensions, monitor adherence to SLAs or KPI targets, and recognize achievements.

b. Focus on proactive data quality monitoring & management through alerts & notifications. Ensure issues are detected through proactive measures rather than reactive incident/ticket management.

3. Technology and Architecture Enablement:

a. Build a robust data architecture with a configurable and scalable technology stack

b. Build a metadata strategy to define a consistent and uniform set of attributes for master data and leverage it for analytics and automation

c. Build controls throughout the data integration pipelines to minimize failures. Implement AI tools to automate processes, identify error patterns and take proactive actions

4. Protocols for Governance and Compliance:

a. Create a RACI matrix for data access control that outlines responsibilities and accountability for all parties involved in data management

b. Document policies and standards to be followed while working with data throughout its lifecycle

c. Define governance mechanisms to ensure adherence to privacy, security and regulatory compliance

d. Create live data health dashboards to track and highlight discrepancies, with drill-down views to help teams track their performance.

5. Training and Collaboration:

a. Create training materials such as Standard Operating Procedures (SOPs), best practices, checklists and FAQs for enabling teams to follow the right process

b. Conduct regular training workshops to develop skills and improve data literacy. Emphasize the importance of data quality management and guide teams on how to practice it

c. Encourage collaboration across teams enabled by communication tools and shared platforms with agile ways of working

6. Continuous Improvement:

a. Establish well-defined feedback loops. Conduct retrospectives to monitor target metrics and KPIs

b. Perform root cause analysis of recurring issues, analyze the trend and define action items with clear timelines for areas that need improvement

c. Establish an ongoing process for continuous improvement and performance optimization through innovation
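Items 2 and 4 of the framework call for a CoE that defines KPIs across data quality dimensions, monitors them against SLA targets, and surfaces issues proactively through alerts and live dashboards rather than waiting for incident tickets. The sketch below illustrates that idea with simple rule-based checks. It is a minimal example under stated assumptions: the `QualityRule` structure, the rule names, the 99% and 95% targets, and the market data fields (`price`, `currency`) are all hypothetical, and in practice a team would more likely configure a dedicated data quality tool or embed such checks as controls in its pipelines.

```python
from dataclasses import dataclass
from typing import Any, Callable

Record = dict[str, Any]


@dataclass
class QualityRule:
    """A data quality KPI defined by the CoE (hypothetical structure)."""
    name: str
    dimension: str                   # e.g. completeness, validity
    check: Callable[[Record], bool]  # returns True when a record passes
    target_pct: float                # SLA / KPI target for the pass rate


def evaluate(rules: list[QualityRule], records: list[Record]) -> list[tuple[QualityRule, float]]:
    """Score every rule as the percentage of records that pass it."""
    results = []
    for rule in rules:
        passed = sum(1 for r in records if rule.check(r))
        # An empty feed scores 0%, so it triggers alerts instead of passing silently.
        score = 100 * passed / len(records) if records else 0.0
        results.append((rule, score))
    return results


def alerts(results: list[tuple[QualityRule, float]]) -> list[str]:
    """Build one alert per KPI below target, so issues surface before a ticket does."""
    return [
        f"ALERT [{rule.dimension}] {rule.name}: {score:.1f}% < target {rule.target_pct:.1f}%"
        for rule, score in results
        if score < rule.target_pct
    ]


# Hypothetical rules and records for a market data feed.
rules = [
    QualityRule("price present", "completeness", lambda r: r.get("price") is not None, 99.0),
    # Missing prices are counted by the completeness rule, so validity skips them.
    QualityRule("price non-negative", "validity",
                lambda r: r.get("price") is None or r["price"] >= 0, 99.0),
    QualityRule("currency is a 3-letter code", "validity",
                lambda r: isinstance(r.get("currency"), str) and len(r["currency"]) == 3, 95.0),
]
records = [
    {"price": 101.5, "currency": "USD"},
    {"price": None, "currency": "USD"},
    {"price": 99.0, "currency": "EUR"},
    {"price": -1.0, "currency": "GBP"},
]
for message in alerts(evaluate(rules, records)):
    print(message)
```

With these sample records, the completeness and non-negative price rules each score 75% and raise alerts, while the currency rule passes. The same per-rule scores could feed the drill-down data health dashboards described in item 4, giving business and technology teams a shared view of quality.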

While businesses have started on the journey toward collaborative DataOps, enabling it effectively requires a framework-driven approach to realize the envisaged outcomes. We have helped a few global brands alleviate some of their challenges in data operations and management. For example, a large US-based financial services company streamlined its data operations by designing, implementing, and adopting an integrated operating model with a set of meaningful metrics based on this framework. The business benefits delivered included lower operating costs through improved productivity, higher efficiency through process standardization, and more effective decision making thanks to improved data quality.

As data remains crucial to fueling high-performance AI, investing in well-defined frameworks is essential for organizations seeking to optimize their data strategies and operations, enable innovation, and stay ahead of the competition.