Sustainable AI integration balances profit with responsibility, addressing ethical dilemmas, biases, and environmental impacts. It emphasizes the need for strategic, responsible AI practices to ensure long-term societal well-being and business success.

Insights

- AI has the potential to significantly amplify human capabilities, but it also carries inherent biases and risks that need to be carefully managed.

- Algorithmic bias is a serious concern as it can result in discriminatory outcomes, affecting fairness and equality.

- Sustainable AI practices aim to balance profit with responsibility, ensuring that ethical considerations are not overlooked.

- The misuse of AI technologies poses significant threats to privacy and security, highlighting the need for robust safeguards.

- Implementing ethical AI practices is crucial for achieving long-term success and maintaining public trust in AI systems.

Artificial Intelligence (AI) has emerged as a transformative force across industries, promising unprecedented opportunities and profit generation. The telecom and high-tech sectors are no exception: they stand at the forefront of this technological revolution and are poised to have their core operations and business models fundamentally reshaped by AI. These sectors, already driving innovation in communication and internet services, deeply impact our daily lives: how we live, work, learn, and interact with the world. Driven by the allure of enhanced efficiency, personalized customer experiences, and innovative solutions, these industries are rapidly adopting AI across various functions. However, AI is an amplifier of human potential, and as such it becomes deeply intertwined with the biases, values, and strategic choices embedded throughout its entire life cycle, from initial ideation and data collection to model training, deployment, and ongoing monitoring. This life cycle, rather than just the training data or design, is the critical lens through which we can understand how human influence shapes AI’s outcomes, and that influence presents a significant risk.

This dark side of AI manifests in various forms. Algorithmic bias can lead to discriminatory outcomes, while privacy and IP violations can stem from unauthorized data collection and the opaque nature of some AI models. Poorly planned AI strategies can cause job displacement. Furthermore, the potential for AI misuse, including mass surveillance, the facilitation of cross-border terrorism through sophisticated communication and planning tools (with the increased geopolitical tensions that follow), and the development of lethal autonomous weapons systems, poses significant threats to individual liberties and global security and raises complex ethical dilemmas. Critically, the massive energy consumption required to run large AI models also threatens the environment and potentially contributes to climate change. These potential pitfalls arise from tensions between competing priorities and underscore the urgent need for sustainable AI practices.

Persistent tensions: Balancing paradoxes

The concept of sustainable AI integration holds that balancing profit with responsibility is not merely a moral imperative but a strategic necessity for long-term profit creation. The business world often focuses heavily on increasing profits, with risk mitigation taking a backseat. This profit-driven mindset can blind organizations to risk, which makes it hard for them to stand the test of time. When aiming for sustainable AI that maximizes value and minimizes risk, organizations commonly face four paradoxical tensions: organizing, performing, learning, and belonging. Integrating AI creates new tensions within existing business structures, processes, and roles. Resolving them is not a simple one-time trade-off but a continuous process of managing these tensions.

Organizing: To increase the speed of innovation, business units often run their own pilots and proofs of concept, which promotes healthy competition. The challenge lies in establishing effective centralized governance to ensure alignment, prevent duplicated efforts, and maintain consistent standards. This paradoxical tension between speed and governance carries a key risk: even after initial centralization, governance can drift back to decentralization, recreating the problems of misalignment and redundancy.

Performing: This tension is about balancing short-term performance with long-term goals. If a firm prioritizes quick AI deployment without considering data quality and algorithmic bias, it may achieve short-term gains but neglect long-term goals. It also exposes itself to risks such as model degradation, systemic bias, models that turn into black boxes, and missed opportunities. Furthermore, ethical lapses or regulatory violations caused by inadequate auditing of AI use cases throughout the life cycle can result in hefty fines, legal battles, and severe reputational damage. These erode customer trust and brand value, and can cause the organization to fall behind its competition, lose market share, and put its long-term viability at risk.

Learning: This tension involves balancing the exploitation of current knowledge with the exploration of new opportunities. Introducing AI puts pressure on teams who are already managing existing operations. They must both maintain current operations using their existing skills (exploitation) and acquire new AI-related skills (exploration). This creates a significant challenge: how to provide adequate training and a conducive learning environment without further burdening the team, which is often already stretched?

Belonging: This tension is about balancing individual identity with collective goals. Large-scale AI adoption creates anxiety about job losses, pitting managers’ and employees’ job security against the organization’s pursuit of AI-driven efficiency. However, the goal shouldn’t be simply replacing people with machines, but rather retaining valuable employees by investing in upskilling and reskilling initiatives. This empowers them to transition to new, higher-value roles that complement AI, fostering a more adaptable workforce and contributing to a thriving economy in the long run. But are organizations thinking this way?

Beyond these organizational paradoxes, sustainable AI adoption also turns on the tension between formal and substantive rationality. Formal rationality prioritizes efficient, quantifiable outcomes, potentially neglecting the ethical and social considerations that substantive rationality addresses. An intention to use AI sustainably directly addresses this tension, recognizing that true sustainability requires integrating both forms of rationality. By prioritizing ethical considerations and societal impact alongside performance metrics, we should aim to develop AI systems that are not only effective but also responsible and aligned with long-term societal well-being.

Resistance due to the paradox effect: The adoption challenge

Left unchecked, it is precisely these paradoxical tensions that underlie many of the adoption challenges and much of the resistance encountered when implementing AI. The perceived susceptibility of AI systems to data poisoning, adversarial attacks, data drift, and black box problems, all of which can introduce bias or enable manipulation, undermines trust in their objectivity. The fear of severe negative consequences, such as poor investment decisions, lost revenue, job displacement, discriminatory outcomes, and reputational damage, creates widespread apprehension. This anxiety leads to resistance to AI adoption, preventing organizations from fully harnessing its potential benefits. These internal organizational dynamics are mirrored by similar anxieties among external stakeholders, notably customers, creating a broader landscape of resistance. Customers resist AI adoption due to fears about data privacy, security, and misuse. They worry about data leaks, unauthorized use of personal information, potential surveillance, and a lack of transparency in data processing. Their concerns also include AI-enabled malpractices such as algorithmic discrimination, personalized pricing, price gouging, price steering, predatory pricing, misinformation spread by bots, deception, and an increased risk of fraud, all of which erode trust and hinder AI’s acceptance.

AI stories gone wrong: The fear is real

These fears do not exist in a vacuum. Recent history is replete with incidents that have fueled concerns in the information age. In 2022, LastPass, a popular password management service, suffered a significant security breach that exposed encrypted user vaults, raising serious concerns about the security of third-party data storage. A 2019 study by the University of Massachusetts Amherst estimated the energy consumption and carbon emissions associated with training several common NLP models and found that training one large language model could produce as much carbon dioxide as 125 round-trip flights between New York and Beijing. In late 2024, the Texas Tech University Health Sciences Center experienced a cyberattack that potentially exposed the data of 1.4 million patients. In 2023, Samsung restricted employee use of generative AI tools such as ChatGPT on company devices and networks after sensitive internal data was uploaded to the platform, raising data leak concerns. Furthermore, in the classic case of COMPAS, a tool used by US courts to assess the likelihood of a criminal defendant reoffending, the tool was reported to falsely flag Black defendants as high-risk at a higher rate than white defendants. Similarly, Amazon’s AI recruitment tool was found to be biased against female candidates, putting them at a disadvantage in selection. These incidents place immense pressure on organizations not only to address internal operational challenges but also to assuage growing public concerns and maintain customer trust.
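
The kind of disparity reported in the COMPAS case can be expressed as a gap in false positive rates between groups: among people who did not reoffend, how often was each group flagged as high-risk? The following is a minimal Python sketch of that measurement. The group labels, the record layout, and the data are made up for illustration and are not the COMPAS data.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Return the false positive rate per group.

    Each record is (group, predicted_high_risk, reoffended).
    A false positive is a person flagged high-risk who did not reoffend.
    """
    flagged = defaultdict(int)    # non-reoffenders flagged high-risk
    negatives = defaultdict(int)  # all non-reoffenders
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / negatives[g] for g in negatives}

# Illustrative, made-up records (hypothetical groups "A" and "B").
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("A", True, True), ("B", True, True),
]

rates = false_positive_rates(records)
gap = abs(rates["A"] - rates["B"])  # a large gap signals unequal error burdens
```

In this toy data, group A's non-reoffenders are flagged twice as often as group B's. An audit would compare such a gap against a tolerance chosen by the organization; the right tolerance and the right fairness metric depend on the use case.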

AI regulations and guidelines: Foundation to risk-free AI

Recognizing the need for clear guidelines and safeguards, policymakers and regulatory bodies worldwide have developed numerous acts and policies to guide AI development and application.

The Bletchley Declaration, signed at the UK’s AI Safety Summit held at Bletchley Park in November 2023, establishes a shared international understanding of frontier AI’s risks and opportunities. The EU’s AI Act is a recent attempt to broadly regulate AI, focusing on risk levels. It prohibits certain practices deemed to pose unacceptable risk (such as real-time remote biometric identification in public spaces, subject to narrow exceptions) and imposes strict requirements (e.g., conformity assessments, data quality, human oversight) on high-risk systems in areas like healthcare, transportation, and employment. Furthermore, the NIST AI Risk Management Framework structures AI risk management around four core functions (Govern, Map, Measure, and Manage), offering valuable guidance for mitigating bias, manipulation, and other potential harms associated with AI. Organizations like the World Economic Forum and initiatives like the UK’s Data Protection and Digital Information Bill are contributing to the development of global norms and standards for responsible AI. The OECD Principles on AI promote responsible, human-centered AI globally. These diverse efforts reflect a global move towards establishing comprehensive frameworks for responsible AI. Data protection laws complement them. The GDPR stresses data privacy and protection, including the “right to be forgotten” and data portability. The CCPA/CPRA (US) emphasizes data privacy and consumer rights, granting Californians control over their data. Singapore’s Personal Data Protection Act (PDPA) establishes a baseline standard of protection for personal data by governing its collection, use, and disclosure by organizations. The Digital Personal Data Protection Act (DPDPA), India’s data protection law, governs the processing of digital personal data in India by both government and private entities. It is a consent-based framework that advocates data minimization and purpose limitation while upholding citizens’ rights, including the right to access, correct, and erase their data.
India, with its ambitious AI for All vision, has been actively developing its national AI strategy, spearheaded by NITI Aayog for social and economic development across sectors like healthcare, agriculture, and education.

From principles to practice: Navigating the paradox

While these acts and policies provide a valuable foundation, a gap often exists between high-level principles and their practical implementation within organizations. We have taken a step towards adopting these principles internally and extending them externally through our Responsible AI office. With the completion of ISO 42001 certification, we are determined to ensure a sustainable journey as an AI-first organization.

We recognize the need to address AI’s dark side and navigate its inherent paradoxical tensions. We don’t shy away from the complexities and contradictions that AI brings; instead, we actively acknowledge them. The first step towards building sustainable AI is accepting that these tensions exist. Rather than prioritizing one side of a paradox over the other, we seek to integrate both, developing strategies to manage these tensions rather than attempting to eliminate them. Our goal is to foster a mindset that values both sides: for example, encouraging decentralized innovation while centralizing oversight, or balancing the need to maintain current operations with the need to explore new AI capabilities.

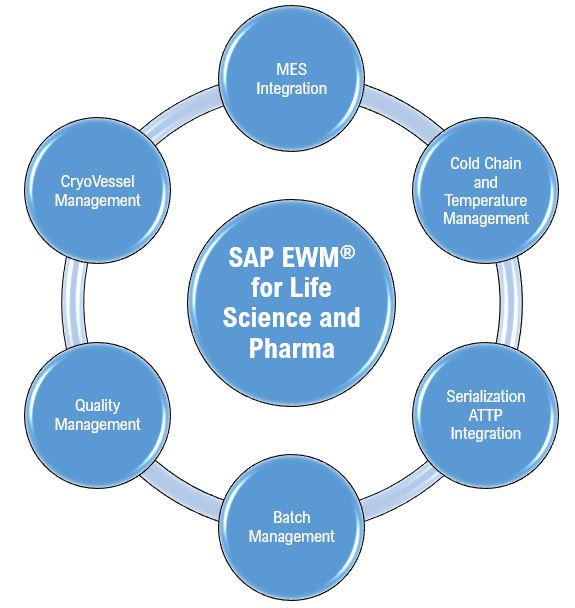

Our approach to responsible AI begins with the identification of the use case, where we use our risk impact assessment framework and AI canvas to identify potential risks that could arise from the model. We then devise a mitigation strategy for these risks across people, processes, and technology. These mitigation strategies in turn guide ongoing audits of the AI model throughout its life cycle. We have codified our sustainable AI technical guardrails across fairness and bias, privacy, security, safety, and explainability. These guardrails are applied throughout the AI life cycle, and we continually enhance them in line with global regulation. To improve data center energy efficiency and environmental sustainability, we have partnered with one of the largest oil and energy providers to promote immersion cooling technology.
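
One way to picture how guardrails can feed an audit is as a release gate over the five categories named above. The Python sketch below is a hypothetical illustration only and is not the framework described in this article: the `release_gate` function, the boolean audit results, and the rule that a missing result counts as a failure are all assumptions.

```python
# Guardrail categories named in the text above.
GUARDRAILS = ("fairness_and_bias", "privacy", "security", "safety", "explainability")

def release_gate(audit_results):
    """Return (approved, blocking) for one audit of a model.

    audit_results maps a guardrail name to True (check passed) or False.
    A guardrail with no recorded result is treated as failing, so a
    missing audit can never silently approve a release.
    """
    blocking = [g for g in GUARDRAILS if not audit_results.get(g, False)]
    return (not blocking, blocking)

# Hypothetical audit: explainability has not been assessed yet.
approved, blocking = release_gate({
    "fairness_and_bias": True,
    "privacy": True,
    "security": True,
    "safety": True,
})
```

Here the release is blocked because one guardrail has no recorded result. Treating a missing audit as a failure, rather than as a pass, reflects the idea that audits should run throughout the life cycle instead of being assumed.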

Building on these learnings, we work to improve our clients’ outcomes in their AI journeys. While working with one of the largest utility organizations in India, we added long-term value to their business model with our customer justice framework. Derived from anthropology and combined with AI, the framework demonstrates a commitment to fairness and accountability in customer grievance redressal. Beyond addressing issues like profanity detection and enhancing user experience, a key element of this model is human-in-the-loop oversight. This ensures accountability and prevents unchecked autonomy by providing clear escalation paths to human representatives for dissatisfied customers. This ethical focus has boosted both employee satisfaction and customer trust. For an Asia Pacific client, we developed a robust target operating model to embrace sustainable AI at scale, along with a change management action plan to make the transition successful.

We have also partnered with the British High Commission to provide strategic input to the UK and Indian governments on the AI Opportunities Action Plan 2025-2030. To promote sustainable AI awareness and adoption in industry, the public sector, and society, we are taking this strategic alliance forward to promote inclusivity by lowering barriers of culture, language, and education, as well as barriers of caste, class, and gender that stem from ethnocentrism and deprivation. To achieve this, we are actively working with policymakers, academia, and domain experts in ethics, law, anthropology, and technology through round tables, panel discussions, hackathons, and knowledge-sharing sessions.

From words to action: Embark on the journey

The true efficacy of sustainable AI frameworks, guidelines, and even emerging legal and policy frameworks hinges on their practical implementation and adoption by businesses and society. Without a genuine intention and commitment to embed these principles into core business practices and organizational culture, even dedicated sustainable AI initiatives might fail to mitigate the real-world harms of unchecked AI development, reducing them to mere window dressing. More worryingly, without leadership commitment and consistent oversight, these initiatives could become opaque and unaccountable, effectively turning the governance structure itself into a “black box” that contradicts its intended purpose. The fate of Enron in 2001, a company once lauded for innovation but ultimately undone by unsustainable practices and a lack of transparency, serves as a stark reminder of the devastating consequences of prioritizing short-term gains over long-term stability and ethical conduct. The Facebook-Cambridge Analytica scandal, in which millions of users’ data were harvested without consent for political campaigning, resulted in a $5 billion FTC fine, eroded user trust in the brand, and put the personally identifiable information (PII) of millions at risk. Does this not serve as a wake-up call for organizations to integrate sustainable AI and avoid severe repercussions?