Executive Summary

In today’s rapidly evolving technological landscape, integrating advanced AI systems with robust ethical frameworks has become paramount. Infosys, a global leader in next-generation digital services and consulting, offers the Responsible AI Suite, part of Infosys Topaz, to help enterprises balance innovation with ethical considerations, such as bias prevention and privacy protection, and maximize their return on investment. The Responsible AI (RAI) frameworks developed as part of this suite include tools, best practices, and guardrails that enable organizations to be responsible by design by adopting them across the AI use case lifecycle. Recently, we also open-sourced the Responsible AI toolkit, which enhances trust and transparency in AI.

Extending these Responsible AI guardrail capabilities to dataset curation, we set out to identify and mitigate challenges such as the propagation of biased, profane, and privacy-sensitive content in data used for model training and fine-tuning. The solution design integrated unique responsible AI techniques and models with NVIDIA NeMo™ Curator, part of the NVIDIA Cosmos™ platform, to clean up data in the pre-modeling, pre-fine-tuning, and RAG pre-ingestion stages.

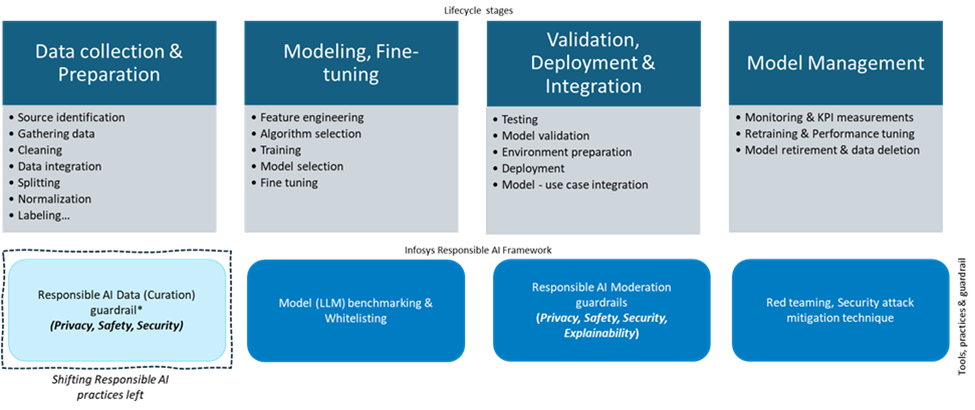

Applying the Responsible-by-Design Principle Across the AI Lifecycle

The AI lifecycle can be divided into four main stages:

- Data collection & preparation,

- Modeling & fine-tuning,

- Validation, deployment & integration, and

- Model management.

Figure 1 maps various Infosys tools, best practices, and guardrails to the AI lifecycle stages. This blog focuses on the Responsible AI data curation guardrail and its integration with NVIDIA NeMo Curator.

Figure 1: Responsible by design across the AI lifecycle

Responsible AI Data Curation Guardrail: Classifiers & Filters

During the Data Collection & Preparation stage, the responsible-by-design framework focuses on curating datasets with attention to bias, safety, and privacy content. The framework also supports language quality and ethical checks on large documents and artifacts before they are ingested into a vector database in RAG pipelines. The guardrail helps identify and remove unethical content during data curation, before AI model training or fine-tuning, or before ingestion into a RAG pipeline.
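As a minimal illustration of a pre-ingestion privacy check, the sketch below masks e-mail addresses and US-style phone numbers in text chunks before they would be embedded and written to a vector database. The regular expressions are a hypothetical stand-in for the Infosys privacy models, which this post does not detail; `mask_pii` and `prepare_for_ingestion` are illustrative names, not part of any Infosys or NVIDIA API.

```python
import re

# Hypothetical regex stand-ins for a privacy guardrail model (illustration only).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def mask_pii(text: str) -> tuple[str, int]:
    """Replace each PII match with a <LABEL> placeholder; return masked text and match count."""
    total = 0
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"<{label}>", text)
        total += n
    return text, total


def prepare_for_ingestion(chunks: list[str]) -> list[str]:
    """Mask PII in every chunk before it is embedded and written to a vector DB."""
    return [mask_pii(chunk)[0] for chunk in chunks]


if __name__ == "__main__":
    print(prepare_for_ingestion(["Contact jane.doe@example.com or 555-123-4567."]))
```

Masking keeps the surrounding context usable for retrieval; a stricter configuration could instead drop any chunk in which PII is found.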

NVIDIA NeMo™ Curator

NeMo Curator improves generative AI model accuracy by processing text, image, and video data at scale for training and customization. It also provides pre-built pipelines for generating synthetic data to customize and evaluate generative AI systems.

With NeMo Curator, developers can curate training data for models across various industries.

It consists of Python modules that use Dask to scale features such as:

- Data download and extraction

- Text cleaning and language identification

- Quality filtering

- Domain classification

- Deduplication

- Streamlined scalability

By applying these modules, organizations can process large-scale unstructured datasets to curate high-quality training or customization data. Features such as document-level deduplication remove duplicate documents from training datasets, which reduces pretraining costs. With NeMo Curator, developers can achieve 16X faster processing for text and 89X faster processing for video when compared to alternatives.
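Document-level exact deduplication can be sketched in a few lines: hash each document's text and keep only the first document seen for each hash. This single-process version uses Python's `hashlib` plus a whitespace normalization step of our own choosing; it is not NeMo Curator's API, which distributes this kind of work with Dask and also offers fuzzy deduplication for near-duplicates.

```python
import hashlib


def exact_dedup(docs: list[dict], text_field: str = "text") -> list[dict]:
    """Keep the first document for each distinct (whitespace-normalized) text."""
    seen: set[str] = set()
    unique = []
    for doc in docs:
        # Collapse runs of whitespace so trivially reformatted copies hash identically.
        normalized = " ".join(doc[text_field].split())
        # MD5 serves only as a fast fingerprint here, not for security.
        digest = hashlib.md5(normalized.encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique
```

Because only a fixed-size digest is kept per document, memory grows with the number of unique documents rather than with their length.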

Infosys Responsible AI Data Guardrail Integration with NVIDIA NeMo Curator

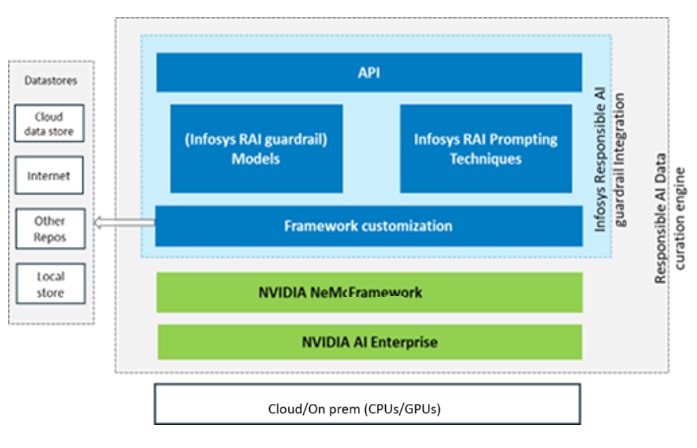

Figure 2 shows the block diagram of our Responsible AI Data Guardrail integration with NeMo Curator. The guardrail is designed to address biased, profane, unsafe, and privacy-sensitive content in large training datasets (or large documents). It combines a unique prompting technique with fit-for-purpose and/or fine-tuned models, and it leverages NeMo Curator for accelerated data processing. The integration also involves customizing the NVIDIA NeMo framework code to deploy the model and prompt templates.
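One way to turn an LLM guardrail into a data-curation filter is to split the work into a scoring step and a keep/drop decision, similar to the score-then-keep convention of NeMo Curator's `DocumentFilter` class (`score_document` and `keep_document`) in the versions we are familiar with. The prompt template, JSON reply format, threshold, and `GuardrailFilter` class below are hypothetical: Infosys' actual prompting technique and models are not described in this post, so `model` is simply any callable that maps a prompt string to the model's raw text reply.

```python
import json
from typing import Callable

# Hypothetical prompt template; the real Infosys prompting technique is not shown here.
PROMPT_TEMPLATE = (
    "You are a data-curation guardrail. Assess the text below for {tenet}.\n"
    'Reply only with JSON: {{"score": <0.0-1.0>, "reason": "<short reason>"}}\n\n'
    "Text:\n{text}"
)


class GuardrailFilter:
    """Score a document with an LLM, then keep it only if its risk score is below a threshold."""

    def __init__(self, model: Callable[[str], str], tenet: str, threshold: float = 0.5):
        self.model = model  # any callable: prompt string -> raw completion text
        self.tenet = tenet
        self.threshold = threshold

    def score_document(self, text: str) -> float:
        raw = self.model(PROMPT_TEMPLATE.format(tenet=self.tenet, text=text))
        try:
            return float(json.loads(raw)["score"])
        except (ValueError, KeyError, TypeError):
            # Unparseable output: treat as maximum risk so the document is flagged.
            return 1.0

    def keep_document(self, score: float) -> bool:
        return score < self.threshold
```

Treating unparseable replies as maximum risk is a conservative design choice: a malformed answer flags the document for review instead of silently passing it.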

Figure 2: Infosys Data Guardrail integration with NVIDIA NeMo Curator

- Infosys Responsible AI guardrail – built on a set of core tenets that help ensure AI systems operate ethically and responsibly

- Infosys RAI guardrail models – open-source, fit-for-purpose LLMs (with fewer parameters) and/or fine-tuned models that identify issues relevant to the RAI tenets (bias, safety, privacy)

- Infosys RAI prompting technique – unique, proven prompting techniques to identify, score, and mitigate issues in datasets that are relevant to the RAI tenets

- Framework customization – supports (but is not limited to) guardrail model integration, prompt customization, and access to bulk data stores

- NVIDIA NeMo Curator – designed to process large-scale data for training purposes

- GPU – the Infosys Responsible AI guardrail and NVIDIA NeMo Curator require GPUs for data curation

- Datastores – works with local, cloud, and other bulk data stores to access the dataset for curation

- API – an optional layer that lets API-based consumers access the data guardrail; for data processing, the Python code can also be used directly without the API
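Putting several of these components together, a curation pass reads records from a datastore, runs the selected tenet checks in order, and writes out only the records that pass. The sketch below does this in a single process over JSONL lines; in the integration described here, NeMo Curator would distribute such per-document work across GPUs and nodes. The stage tuples, scorers, and function names are hypothetical, and the lambda scorer in the demo is a toy placeholder for a guardrail model call.

```python
import io
import json
from typing import Callable, Iterable, Iterator

# A stage is (name, scorer, threshold). The scorer is a hypothetical stand-in for a
# guardrail model call and returns a risk score in [0, 1] for one document's text.
Stage = tuple[str, Callable[[str], float], float]


def read_jsonl(lines: Iterable[str]) -> Iterator[dict]:
    """Yield one record per non-empty JSONL line (e.g. from an open file or io.StringIO)."""
    for line in lines:
        if line.strip():
            yield json.loads(line)


def curate(records: Iterable[dict], stages: list[Stage], text_field: str = "text") -> Iterator[dict]:
    """Run stages in order; drop a record at the first stage whose score reaches its threshold."""
    for record in records:
        for name, scorer, threshold in stages:
            score = scorer(record[text_field])
            record[f"{name}_score"] = score  # keep scores for auditing
            if score >= threshold:
                break  # record fails this tenet, so it is not yielded
        else:
            yield record  # passed every stage


def write_jsonl(records: Iterable[dict], out) -> int:
    """Write records as JSONL to a text stream and return how many were written."""
    count = 0
    for record in records:
        out.write(json.dumps(record) + "\n")
        count += 1
    return count


if __name__ == "__main__":
    source = io.StringIO(
        '{"id": 1, "text": "Quarterly revenue grew 4%."}\n'
        '{"id": 2, "text": "Email me at jane@example.com"}\n'
    )
    stages = [("privacy", lambda t: 1.0 if "@" in t else 0.0, 0.5)]  # toy scorer
    sink = io.StringIO()
    print(write_jsonl(curate(read_jsonl(source), stages), sink))  # prints 1
```

Because every function works on iterators, records stream through one at a time, and a failing record stops at its first failed stage so later (possibly more expensive) checks are skipped.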

Figure 3 shows how the Infosys Responsible AI Data guardrail is used in an AI pipeline that involves modeling. RAI tenets, including bias, safety, and privacy, are considered based on their applicability and importance to the dataset curation process. The guardrail lets users choose which tenets to apply based on use case requirements. For example, a given use case may need to retain or eliminate one or more types of bias (such as gender, age, or racial bias) in the training dataset. These tenet details, specifying what to retain and what to eliminate, are passed as simple configurations.
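The post states that tenet choices, such as which bias types to retain or eliminate, are passed as simple configurations, but it does not give the format. The following is a hypothetical shape for such a configuration (`TenetConfig`) and a rule (`should_drop`) that decides whether a document is removed, given the labels a detector assigned to it.

```python
from dataclasses import dataclass, field


@dataclass
class TenetConfig:
    """Hypothetical config: which tenets run, and which bias types to eliminate or retain."""
    tenets: set = field(default_factory=lambda: {"bias", "safety", "privacy"})
    eliminate_bias: set = field(default_factory=set)  # e.g. {"gender", "racial"}
    retain_bias: set = field(default_factory=set)     # e.g. {"age"}, kept deliberately


def should_drop(detections: dict, cfg: TenetConfig) -> bool:
    """detections maps tenet -> set of detected labels, e.g. {"bias": {"gender"}}."""
    for tenet, labels in detections.items():
        if tenet not in cfg.tenets or not labels:
            continue  # tenet not selected for this use case, or nothing detected
        if tenet == "bias":
            # Only bias types marked for elimination (and not retained) trigger removal.
            if labels & (cfg.eliminate_bias - cfg.retain_bias):
                return True
        else:
            return True  # any safety or privacy finding removes the document
    return False
```

Keeping the rule separate from the detectors means the same detection output can be reused under different use-case configurations.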

Figure 3: Infosys Responsible AI Data guardrail functioning in AI Pipeline

Unique Features of the Responsible AI Data guardrail

Infosys Responsible AI Data guardrail provides features to process varied data types, including unstructured text, structured data and multimodal data (image, video, audio).

- Privacy: Image privacy (including DICOM privacy) for image data, video privacy for video data, and code privacy for source code, helping safeguard sensitive data across formats.

- Bias: Group fairness, individual fairness, bias detection and mitigation, and text fairness evaluation, which are critical to promoting fair and equitable AI outcomes.

- Safety: Image safety categorization and adult content detection to protect users from harmful content.
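Group fairness can be measured in several ways. One common metric, shown here as an illustration rather than as the guardrail's own method, is the demographic parity difference: the largest gap in positive-label rate between any two groups in a labeled dataset. `group_key` and `label_key` are hypothetical field names.

```python
from collections import defaultdict


def demographic_parity_difference(rows: list[dict], group_key: str, label_key: str):
    """Return (largest gap in positive-label rate between groups, per-group rates)."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in rows:
        group = row[group_key]
        totals[group] += 1
        positives[group] += 1 if row[label_key] else 0
    rates = {g: positives[g] / totals[g] for g in totals}
    if not rates:
        return 0.0, {}  # empty dataset: nothing to compare
    return max(rates.values()) - min(rates.values()), rates
```

A value near 0.0 suggests similar positive rates across groups; a large gap may indicate a group-dependent pattern in the training data that is worth reviewing before fine-tuning.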

Conclusion

Through the integration of Infosys’ Responsible AI guardrails with NVIDIA NeMo Curator, we combine the strengths of both frameworks to improve the quality of curated datasets. Infosys’ guardrails address key aspects such as bias and safety, helping ensure that datasets are fit for purpose, and they adapt to various data types (structured data, unstructured text, and images). Meanwhile, NeMo Curator accelerates data processing and scales to multi-node, multi-GPU systems.