For years, text-to-speech (TTS) has been a workhorse technology, transforming written words into spoken form. It’s a staple in e-readers, translation tools, and accessibility applications. But a crucial element is often missing: emotion. Flat, emotionless narration can leave listeners disengaged, especially with creative content like audiobooks or educational materials.

Text-to-speech with emotion aims to breathe life into written words. Imagine a storybook coming alive, the narrator’s voice not just reading the words but capturing the thrill of the chase, the warmth of a hug, and the chilling fear of facing a monster. That is the promise of text-to-speech with emotion, and it could change the way we experience text.

How Does It Work?

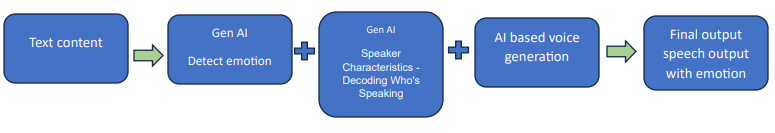

So how does text-to-speech with emotion turn written words into emotionally expressive speech? Here’s a breakdown of the underlying approach:

- Emotion Detection: This is where generative AI (Gen AI) comes in. At their core, these models are usually deep neural networks (DNNs) trained on large amounts of text, often including data annotated with emotion labels. They can analyze a passage sentence by sentence and identify the emotions present. Is it a lighthearted joke? A heart-wrenching goodbye? The model weighs signals such as word choice, sentence structure, and overall sentiment to read the emotional undercurrent of the words.

- Speaker Characteristics – Decoding Who’s Speaking: Text-to-speech with emotion goes beyond just conveying feelings; it also considers the characteristics of the speaker. Here’s how Gen AI can identify who’s speaking in a written passage:

  - Context Clues: Language can offer hints about the speaker. Pronouns like “he” or “she” can indicate gender. References to age or actions (“The young boy ran…”) can provide clues about the speaker’s age. The Gen AI model can be trained to recognize these linguistic cues and use them to infer the speaker’s demographics.

  - Dialogue Analysis: In conversations, identifying the speaker becomes even more crucial. Gen AI can analyze dialogue structure, attribution tags (“John said…”), and character descriptions to determine who is speaking at each point. This allows voice characteristics to change dynamically throughout the text.

  - Style and Tone: Writing style can sometimes offer clues about the speaker’s age or background. Formal language might suggest an older speaker, while slang or informal language might indicate a younger person. The Gen AI model can be trained to identify these stylistic variations and use them to infer speaker demographics.

- Voice Generation: Once the Gen AI model has inferred the speaker’s gender and age (or other relevant characteristics), the system can use them to select an appropriate voice profile from the voice generator’s library. Such a profile may consist of pre-recorded audio samples or learned characteristics associated with speakers of a certain age and gender. The selected profile, the text, and the detected emotion are then passed to a voice generator model. Many neural voice generators build on ideas from WaveNet, a deep learning architecture designed to generate raw audio waveforms. Trained on large amounts of audio data, such models can produce speech that not only delivers the words accurately but also conveys the intended emotion: imagine a voice trembling with fear or bursting with excitement, driven by the emotional analysis from the Gen AI model. To get there, the system adjusts parameters such as pitch, speaking rate, and spectral envelope to create natural-sounding inflections. In practice, we can rely on cloud-hosted services like Azure Speech Studio or open-source models like Bark.
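The Emotion Detection step can be illustrated with a deliberately tiny stand-in. This Python sketch splits a passage into sentences and labels each one; instead of a trained DNN it uses a small hand-written keyword lexicon (`EMOTION_LEXICON`, a hypothetical placeholder), so it only shows the shape of a sentence-by-sentence pipeline, not how a real model scores emotion.

```python
import re

# Hypothetical toy lexicon standing in for a trained emotion classifier (DNN).
# A real model learns these associations from annotated data instead.
EMOTION_LEXICON = {
    "joy": {"laughed", "happy", "delight", "hug", "warm", "smiled"},
    "fear": {"monster", "trembled", "scream", "dark", "afraid", "chilling"},
    "sadness": {"goodbye", "tears", "alone", "lost", "cried"},
    "excitement": {"chase", "thrill", "race", "wow", "suddenly"},
}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def detect_emotion(sentence: str) -> str:
    """Return the emotion with the most lexicon hits, or 'neutral' if none match."""
    words = re.findall(r"[a-z']+", sentence.lower())
    scores = {
        emotion: sum(word in vocab for word in words)
        for emotion, vocab in EMOTION_LEXICON.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "neutral"


def tag_emotions(text: str) -> list[tuple[str, str]]:
    """Pair every sentence with its detected emotion label."""
    return [(s, detect_emotion(s)) for s in split_sentences(text)]


story = ("The monster crept out of the dark. Mia trembled. "
         "Then her brother gave her a warm hug. The end.")
for sentence, emotion in tag_emotions(story):
    print(f"{emotion:>8}: {sentence}")
```

In a real system, `detect_emotion` would be replaced by a call to a trained classifier; the surrounding loop that walks the text sentence by sentence stays the same.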
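The Dialogue Analysis idea can be sketched with a regular expression that finds attribution tags such as `"Run!" shouted Tom` or `"Wait," Anna said` and looks the speaker up in a table of character profiles. The pattern, the verb list, and the `CHARACTERS` table are hypothetical and English-only; production systems typically rely on trained quote-attribution and coreference models rather than regexes.

```python
import re

# Hypothetical character profiles, e.g. collected from context clues such as
# "Tom, a young boy, ..." earlier in the story.
CHARACTERS = {
    "Tom": {"gender": "male", "age": "child"},
    "Anna": {"gender": "female", "age": "adult"},
}
UNKNOWN = {"gender": "unknown", "age": "unknown"}

VERBS = "said|asked|shouted|whispered|replied"
# Matches a quoted line followed by an attribution tag in either order:
#   "Run!" shouted Tom      or      "Wait," Anna said
ATTRIBUTION = re.compile(
    rf'"(?P<quote>[^"]+)"\s*'
    rf'(?:(?:{VERBS})\s+(?P<after>[A-Z][a-z]+)'
    rf'|(?P<before>[A-Z][a-z]+)\s+(?:{VERBS}))'
)


def attribute_dialogue(text: str) -> list[tuple[str, dict, str]]:
    """Return (speaker, profile, quote) for every attributed line of dialogue."""
    results = []
    for match in ATTRIBUTION.finditer(text):
        speaker = match.group("after") or match.group("before")
        results.append((speaker, CHARACTERS.get(speaker, UNKNOWN), match.group("quote")))
    return results


passage = '"Run!" shouted Tom. "Wait for me," Anna said.'
for speaker, profile, quote in attribute_dialogue(passage):
    print(f"{speaker} ({profile['gender']}, {profile['age']}): {quote}")
```

Keeping profiles in a lookup table means each speaker’s inferred gender and age only has to be worked out once and can then drive voice selection for every line they speak.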
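For Voice Generation, one common way to hand speaker and emotion choices to a synthesizer is SSML, the W3C Speech Synthesis Markup Language, whose `<prosody>` element controls pitch, rate, and volume. This sketch assumes an SSML-capable engine; the voice names and the emotion-to-prosody values are made up for illustration and would need tuning per voice. Azure’s SSML adds its own extensions (for example `mstts:express-as` for speaking styles on supported voices), while prompt-driven models such as Bark are steered through the text prompt itself.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical voice names; real names depend on the TTS service you use.
VOICE_LIBRARY = {
    ("female", "adult"): "narrator-female-adult",
    ("male", "child"): "narrator-male-child",
}
DEFAULT_VOICE = "narrator-neutral"

# Illustrative (untuned) emotion -> SSML prosody settings.
PROSODY = {
    "fear": {"pitch": "+8%", "rate": "fast", "volume": "soft"},
    "joy": {"pitch": "+5%", "rate": "medium", "volume": "loud"},
    "sadness": {"pitch": "-10%", "rate": "slow", "volume": "soft"},
    "neutral": {"pitch": "+0%", "rate": "medium", "volume": "medium"},
}


def select_voice(gender: str, age: str) -> str:
    """Pick a voice profile for the inferred speaker, falling back to a default."""
    return VOICE_LIBRARY.get((gender, age), DEFAULT_VOICE)


def to_ssml(text: str, emotion: str, gender: str, age: str) -> str:
    """Wrap one sentence in SSML with a voice choice and emotion-specific prosody."""
    voice = select_voice(gender, age)
    p = PROSODY.get(emotion, PROSODY["neutral"])
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" xml:lang="en-US">'
        f"<voice name={quoteattr(voice)}>"
        f'<prosody pitch="{p["pitch"]}" rate="{p["rate"]}" volume="{p["volume"]}">'
        f"{escape(text)}</prosody></voice></speak>"
    )


print(to_ssml("Run & hide!", "fear", "male", "child"))
```

The text is XML-escaped before it is embedded, so characters like `&` in the story cannot break the markup.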

Beyond the Basics: Pushing the Boundaries

While this core approach offers a solid foundation, there’s room for further exploration and refinement:

- Emotional Nuance: Human emotions are complex and multifaceted. Can the AI identify the intensity of an emotion (content vs. ecstatic)? Can it handle sarcasm or mixed emotions (like bittersweet joy)? Future advances in Gen AI are likely to focus on capturing these subtleties, for example by incorporating training data with more nuanced emotion labels.

- The Art of Natural Delivery: While voice generators can produce emotional tones, perfecting natural-sounding inflections and variation remains a continuous challenge: think of the subtle rise in pitch at the end of a question, or the slowing down for emphasis. Work in this area aims at truly human-like emotional delivery, for example through adversarial training, in which a discriminator learns to distinguish synthetic speech from real human speech with emotion and the generator learns to fool it.

- Data is Key: The accuracy of both Gen AI and the voice generator models relies heavily on the quality and quantity of training data. The more diverse and emotionally rich the data, the better the models can learn and perform. This highlights the importance of creating extensive datasets of text annotated with various emotions and corresponding high-quality audio recordings of human speech expressing those emotions.
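To make Emotional Nuance and Data is Key more concrete, here is a sketch of what one annotated training example might look like when it carries an intensity score alongside the emotion label, plus a simple coverage check that flags under-represented emotions. The `EmotionSample` fields, the file paths, and the `min_per_emotion` threshold are hypothetical choices, not a standard dataset format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionSample:
    """One hypothetical training example pairing text, labels, and a recording."""
    text: str
    emotion: str      # e.g. "joy", "fear", "sadness"
    intensity: float  # 0.0 (barely present) to 1.0 (extreme), e.g. content vs. ecstatic
    audio_path: str   # human recording of the text spoken with that emotion

    def __post_init__(self):
        if not 0.0 <= self.intensity <= 1.0:
            raise ValueError(f"intensity must be in [0, 1], got {self.intensity}")


def coverage_report(samples, emotions, min_per_emotion=2):
    """Count samples per emotion and list the emotions below the minimum."""
    counts = Counter(s.emotion for s in samples)
    missing = sorted(e for e in emotions if counts[e] < min_per_emotion)
    return counts, missing


dataset = [
    EmotionSample("We won the final!", "joy", 0.9, "audio/0001.wav"),
    EmotionSample("That was a nice walk.", "joy", 0.3, "audio/0002.wav"),
    EmotionSample("Something moved in the dark.", "fear", 0.6, "audio/0003.wav"),
]
counts, missing = coverage_report(dataset, ["joy", "fear", "sadness"])
print(dict(counts), "needs more data:", missing)
```

Storing intensity as a number rather than a separate label per level (for example "content" and "ecstatic" as distinct classes) lets one emotion category cover a whole range of strengths.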

A Multimodal Approach: Beyond Words

Adding another dimension to emotion detection can further enhance the system:

- Acoustic Features: Speech characteristics like pitch, speaking rate, and pauses provide valuable cues to emotion. A faster pace with a higher pitch might indicate excitement, while a slower, quieter delivery could suggest sadness. Representations such as Mel-Frequency Cepstral Coefficients (MFCCs), which summarize the short-term spectral envelope of speech, can give the AI another layer of information to learn from.

- Multimodal Cues: Words alone don’t tell the whole story. Punctuation marks (exclamation points, question marks), capitalization, and even emojis, where present, can offer valuable clues about the intended emotion. Integrating these signals into the analysis gives the AI a more complete picture.

- User Control (Human in the Loop): Imagine having some control over the emotional delivery, such as choosing between subtle and dramatic narration depending on the content, or adjusting the speaker’s age to better suit the story. User control adds a level of personalization and can enhance the overall experience.
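The Acoustic Features bullet can be grounded with a dependency-free sketch. MFCCs are normally computed with an audio library (for example `librosa.feature.mfcc`), so this example instead shows two simpler cues from the same bullet: pitch, estimated by picking the autocorrelation peak within a typical speaking range, and loudness, measured as RMS energy. A synthetic 220 Hz sine stands in for a voiced speech frame; real speech would need framing, windowing, and voicing detection first.

```python
import math


def synth_tone(freq_hz, sample_rate=16_000, seconds=0.1, amplitude=0.5):
    """Generate a pure sine tone as a stand-in for a short voiced speech frame."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def rms(frame):
    """Root-mean-square energy: a rough loudness cue."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def estimate_pitch(frame, sample_rate=16_000, fmin=50.0, fmax=400.0):
    """Estimate the fundamental frequency (Hz) from the autocorrelation peak,
    searching only lags that correspond to fmin..fmax."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_corr = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Unnormalized sum: shorter overlaps at larger lags score lower,
        # which favors the true period over its multiples.
        corr = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if corr > best_corr:
            best_lag, best_corr = lag, corr
    return sample_rate / best_lag


tone = synth_tone(220)
print(f"pitch ~ {estimate_pitch(tone):.1f} Hz, rms = {rms(tone):.3f}")
```

Because the lag is an integer number of samples, the estimate is only accurate to a few hertz at this sample rate, which is enough to tell a raised, excited pitch from a lowered, subdued one.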
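The Multimodal Cues bullet translates naturally into a few surface features. This sketch counts exclamation and question marks, measures the share of upper-case letters, and detects emoji with a rough code-point range check; `intensity_boost` is a hypothetical heuristic showing how such cues might nudge emotional intensity, with weights chosen only for illustration.

```python
# Rough emoji ranges (Misc Symbols/Dingbats and the main pictograph blocks); not exhaustive.
EMOJI_RANGES = [(0x2600, 0x27BF), (0x1F300, 0x1FAFF)]


def is_emoji(ch: str) -> bool:
    cp = ord(ch)
    return any(lo <= cp <= hi for lo, hi in EMOJI_RANGES)


def text_cues(sentence: str) -> dict:
    """Extract surface cues that often correlate with emotional intent."""
    letters = [c for c in sentence if c.isalpha()]
    caps = sum(c.isupper() for c in letters)
    return {
        "exclamations": sentence.count("!"),
        "questions": sentence.count("?"),
        "caps_ratio": caps / len(letters) if letters else 0.0,
        "emojis": sum(is_emoji(c) for c in sentence),
    }


def intensity_boost(cues: dict) -> float:
    """Hypothetical heuristic: turn cues into a 0..1 boost for emotional intensity."""
    boost = 0.15 * min(cues["exclamations"], 3) + 0.1 * min(cues["emojis"], 2)
    if cues["caps_ratio"] > 0.6:
        boost += 0.2  # mostly upper-case text tends to read as shouting
    return min(boost, 1.0)


for line in ["WOW, really?! 😀", "STOP RIGHT NOW!!!", "see you tomorrow."]:
    cues = text_cues(line)
    print(line, cues, round(intensity_boost(cues), 2))
```

Capping each count keeps a run of twenty exclamation marks from dominating the score; a trained model would learn such weights from data instead.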
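User Control can be as simple as a settings object that scales the prosody changes chosen for an emotion and optionally overrides the inferred speaker age. `NarrationSettings`, `STYLE_SCALE`, and the percentage-based prosody deltas are hypothetical; they illustrate the human-in-the-loop idea rather than any particular product’s API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NarrationSettings:
    """Hypothetical user-facing knobs for emotional delivery."""
    style: str = "subtle"              # "subtle" or "dramatic"
    speaker_age: Optional[str] = None  # override the inferred age, e.g. "child"


# How strongly to apply the emotion's prosody changes for each style (illustrative).
STYLE_SCALE = {"subtle": 0.5, "dramatic": 1.5}


def apply_settings(prosody_delta: dict, inferred_age: str, settings: NarrationSettings):
    """Scale percentage prosody changes by the chosen style and apply any age override."""
    scale = STYLE_SCALE.get(settings.style, 1.0)
    scaled = {name: round(value * scale, 1) for name, value in prosody_delta.items()}
    return scaled, settings.speaker_age or inferred_age


fear_delta = {"pitch_pct": 8.0, "rate_pct": 10.0}  # hypothetical deltas for "fear"
print(apply_settings(fear_delta, "adult", NarrationSettings(style="dramatic")))
print(apply_settings(fear_delta, "adult", NarrationSettings(speaker_age="child")))
```

Scaling the automatically chosen values, rather than replacing them, keeps the model’s emotional reading intact while letting the listener decide how strongly it is expressed.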

The Future of Storytelling: Where Text Meets Emotion

Text-to-speech with emotion has the potential to revolutionize the way we interact with text. Here’s a glimpse into the possibilities that lie ahead:

- Audiobooks that transport you: Immerse yourself in a story with a narrator who captures the full spectrum of emotions, from laughter to tears. Imagine a child’s bedtime story narrated with a gentle, soothing voice or a thrilling adventure delivered with a voice brimming with excitement and suspense.

- Engaging e-learning experiences: Learning doesn’t have to be dry. Imagine educational modules that come alive with emotionally charged narration that personalizes the learning experience and may help students retain information more effectively. A science lesson about the wonders of the natural world narrated with a sense of awe can spark a lifelong curiosity in young minds.

- Voice assistants that understand you: Imagine interacting with a voice assistant that not only understands your words but also responds with empathy. A virtual assistant that can offer words of encouragement on a difficult day or celebrate your achievements with a touch of joy can make everyday interactions more meaningful.

Conclusion: A Brighter Future for Text

Text-to-speech with emotion is still evolving, but its potential is already clear. By combining Gen AI, voice generation, and a focus on user experience, we can create a future where text comes alive, brimming with emotional depth and fostering a deeper connection with the written word. This technology could enhance learning, entertainment, and communication in countless ways. As we continue to develop and refine it, we can look forward to a future where text not only informs but also truly moves us.