The last few decades have seen tremendous advances in data storage and processing, along with a huge leap in the volume and velocity of data that systems handle. The shift from value derived purely from transactions (say, the payment made at the end of a purchase) to value generated through engagement data (clicks, time spent, page views) has increased the volume of data processed by several orders of magnitude. Advances in database technology have enabled this change, and NoSQL databases in particular have made it possible to process data at this scale efficiently.

Google Cloud offers a variety of databases on its platform to meet these needs. In this blog, let us take a deeper look at Google Cloud Bigtable and how it helps organizations process large data volumes (terabytes to petabytes).

Bigtable: Built for Scale

Cloud Bigtable, launched publicly on Google Cloud in 2015, is a highly scalable key-value store that can store and retrieve terabytes to petabytes of data. It is based on Bigtable, the database Google built for its own services. Google published Bigtable's design in a 2006 research paper, which went on to inspire a family of high-performance databases such as Apache HBase and Apache Cassandra.

Cloud Bigtable stores data as a sorted map of keys to values. Data is organized into tables made up of rows; each row is identified by a single row key and contains columns that are grouped into column families[1]. Bigtable is not an RDBMS, so it does not support joins, SQL queries, or multi-row transactions.
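To make the data model above more concrete, here is a toy in-memory sketch of how a Bigtable row is laid out: row key, then column family, then column qualifier, then timestamped cell versions, with rows kept sorted by row key. The class `ToyTable` and its methods are hypothetical names for illustration only; this is not the Cloud Bigtable client library API. It shows why lookups are by row key or by a contiguous range of row keys rather than by joins.

```python
from bisect import bisect_left, insort


class ToyTable:
    """Toy in-memory model of Bigtable's data layout (hypothetical, not the client API).

    row key -> column family -> column qualifier -> cells as (timestamp, value),
    with row keys kept in lexicographic byte order so key ranges can be scanned.
    """

    def __init__(self, column_families):
        self.column_families = set(column_families)  # families are declared up front
        self.rows = {}         # row key (bytes) -> {family: {qualifier: [(ts, value), ...]}}
        self.sorted_keys = []  # row keys in lexicographic byte order

    def set_cell(self, row_key, family, qualifier, value, timestamp):
        if family not in self.column_families:
            raise KeyError(f"unknown column family: {family}")
        if row_key not in self.rows:
            self.rows[row_key] = {}
            insort(self.sorted_keys, row_key)
        cells = self.rows[row_key].setdefault(family, {}).setdefault(qualifier, [])
        cells.append((timestamp, value))
        cells.sort(key=lambda cell: cell[0], reverse=True)  # newest version first

    def read_row(self, row_key):
        """Point lookup by row key; returns None if the row does not exist."""
        return self.rows.get(row_key)

    def read_rows(self, start_key, end_key):
        """Yield (key, row) for start_key <= key < end_key, in row-key order."""
        i = bisect_left(self.sorted_keys, start_key)
        while i < len(self.sorted_keys) and self.sorted_keys[i] < end_key:
            key = self.sorted_keys[i]
            yield key, self.rows[key]
            i += 1


table = ToyTable(["stats", "profile"])
table.set_cell(b"user#42", "stats", b"clicks", b"7", timestamp=1)
table.set_cell(b"user#42", "stats", b"clicks", b"9", timestamp=2)
table.set_cell(b"user#07", "profile", b"country", b"IN", timestamp=1)

latest = table.read_row(b"user#42")["stats"][b"clicks"][0]       # (2, b"9")
users = [key for key, _ in table.read_rows(b"user#", b"user$")]  # [b"user#07", b"user#42"]
```

Rows only hold the columns they actually use, so a sparse table costs nothing for empty cells; in this sketch that falls out of using nested dictionaries.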

Cloud Bigtable is great for workloads that are large-scale and have high throughput, such as IoT, advertising, clickstreams, and streaming data. Common use cases include:

- IoT data: Data generated by IoT devices can be stored using time-series style schemas

- Advertising: Ad impressions and related high-volume data can be efficiently stored and processed

- Streaming data: Bigtable is a good choice for storing data that streams over Kafka or Cloud Pub/Sub. This data can then be analyzed using Cloud Dataflow or Cloud Dataproc
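For the IoT case, a common time-series pattern is to build the row key from the device ID followed by a reversed, zero-padded timestamp. Leading with the device ID spreads writes from many devices across the key space instead of concentrating them at the "current time" end of the table, and reversing the timestamp makes each device's newest reading sort first. The function name `sensor_row_key`, the `#` delimiter, and the `MAX_MILLIS` bound are assumptions for this sketch, not a prescribed Bigtable schema.

```python
MAX_MILLIS = 10**13  # assumed upper bound on epoch milliseconds (roughly the year 2286)


def sensor_row_key(device_id: str, epoch_millis: int) -> bytes:
    """Hypothetical time-series row key: <device_id>#<reversed, zero-padded timestamp>."""
    if "#" in device_id:
        raise ValueError("device_id must not contain the '#' delimiter")
    if not 0 <= epoch_millis < MAX_MILLIS:
        raise ValueError("timestamp out of range")
    # Subtracting from a fixed maximum makes newer readings produce smaller numbers,
    # so after zero-padding to 13 digits they sort first within each device's keys.
    reversed_ts = MAX_MILLIS - 1 - epoch_millis
    return f"{device_id}#{reversed_ts:013d}".encode("utf-8")


keys = sorted(
    sensor_row_key("thermostat-17", ts)
    for ts in (1_700_000_000_000, 1_700_000_060_000, 1_700_000_120_000)
)
# keys[0] is the most recent reading: b"thermostat-17#8299999879999"
```

Reading the latest value for a device then becomes a scan of the `thermostat-17#` prefix limited to one row.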

Cloud Bigtable has been proven at scale: the same underlying technology powers planet-scale Google services such as Search, Gmail, Maps, and Analytics. As a managed service, it removes the burden of day-to-day database administration, allowing enterprises to focus on providing a superior experience to their end users.

Infosys Solution for High-throughput Systems

The challenge

A large services company was running a mission-critical system on mainframes that processed over 3,500 transactions per second (TPS). They wanted to replace it with a modern, modular system that could scale out to handle a growing workload without a proportional increase in IT costs.

The legacy system, built decades ago, had cost, scalability, and maintainability challenges, such as:

- The old C/C++ and legacy RDBMS-based platform was difficult to change due to its monolithic nature

- It was difficult to procure the skill base needed to maintain the legacy systems

The client needed an architecture that could support a growing volume of up to 4,500 TPS and retain data for 2 years, amounting to more than 10 TB.

The solution

Infosys helped the client migrate to a modern Java-based system that leveraged the power of Bigtable. We chose Google Cloud as the platform to host the modernized application and Google Kubernetes Engine (GKE) to host the services that processed the messages streaming over Pub/Sub.

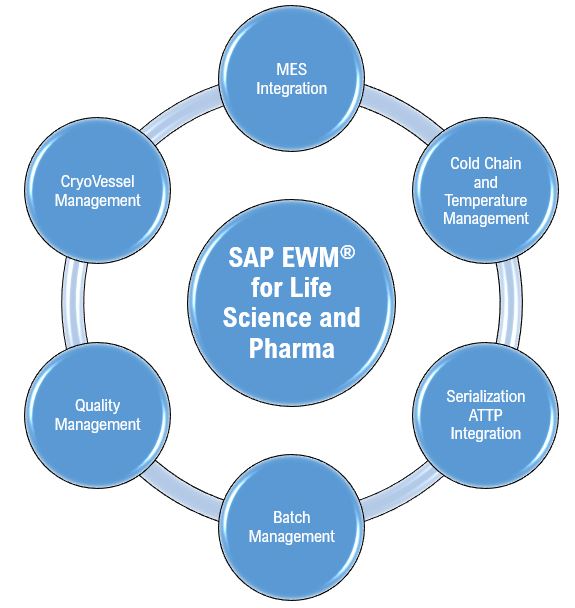

Figure 1: High-level solution architecture

The Infosys solution leveraged Cloud Bigtable as the central repository for storing messages. Five tables were created to hold the messages as business transactions moved from one stage to another. The keys of the tables were carefully designed to allow for efficient retrieval of messages based on business fields and time of arrival. The cluster was designed to auto-scale based on the volume of messages being ingested.
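The paragraph above describes keys designed around business fields and time of arrival. The client's actual key layout is not given here, so the following is only a sketch under assumed names (`business_key`, `message_id`, and the `#` delimiter are hypothetical). Putting the business field first keeps one entity's messages contiguous, the zero-padded arrival time orders them chronologically, and the message ID keeps keys unique. A time-window query then reduces to a single row range with an inclusive start key and an exclusive end key.

```python
PAD = 13  # digits of zero-padding so lexicographic order matches numeric order


def _ts(millis: int) -> str:
    if not 0 <= millis < 10**PAD:
        raise ValueError("timestamp out of range")
    return f"{millis:0{PAD}d}"


def message_row_key(business_key: str, arrival_millis: int, message_id: str) -> bytes:
    """Hypothetical layout: <business_key>#<arrival time in ms>#<message_id>."""
    if "#" in business_key:
        raise ValueError("business_key must not contain the '#' delimiter")
    return f"{business_key}#{_ts(arrival_millis)}#{message_id}".encode("utf-8")


def window_bounds(business_key: str, start_millis: int, end_millis: int):
    """Row range [start_key, end_key) for one key's messages that arrived in [start, end)."""
    prefix = f"{business_key}#"
    return (f"{prefix}{_ts(start_millis)}#".encode("utf-8"),
            f"{prefix}{_ts(end_millis)}#".encode("utf-8"))


keys = sorted([
    message_row_key("ACME", 1_000, "m1"),
    message_row_key("ACME", 2_500, "m2"),
    message_row_key("ACME", 4_000, "m3"),
    message_row_key("ACMEX", 2_000, "m4"),
])
start_key, end_key = window_bounds("ACME", 2_000, 4_000)
in_window = [k for k in keys if start_key <= k < end_key]  # [b"ACME#0000000002500#m2"]
```

Because the delimiter sorts below letters and digits, a business key that merely shares a prefix (such as `ACMEX`) falls outside the range. With the `google-cloud-bigtable` Python client, bounds like these would be passed as the `start_key` and `end_key` arguments of `Table.read_rows`.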

This scalable implementation allowed the system to exceed an ingestion rate of 6,000 transactions per second during a benchmarking exercise, giving the client confidence that the platform could handle future business growth. The solution also reduced the total cost of ownership of the system by retiring the on-premises hardware and database.
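Bigtable's built-in autoscaling, which the cluster in this solution relied on, is configured with a minimum and maximum node count, a CPU utilization target, and a storage utilization target; Google computes the actual node count. The sketch below is a simplified target-tracking rule to show how such parameters interact. It is not Bigtable's internal algorithm, and the `storage_per_node_tb` value and all policy numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class AutoscalingPolicy:
    min_nodes: int
    max_nodes: int
    cpu_target: float           # e.g. 0.6 means aim for 60% average CPU utilization
    storage_target: float       # fraction of per-node storage capacity to aim for
    storage_per_node_tb: float  # assumed per-node capacity; depends on storage type


def recommended_nodes(policy, current_nodes, cpu_utilization, storage_used_tb):
    """Simplified target-tracking rule (illustrative, not Bigtable's actual algorithm)."""
    # Nodes needed for average CPU to sit at the target, assuming load spreads evenly.
    for_cpu = math.ceil(current_nodes * cpu_utilization / policy.cpu_target)
    # Nodes needed for stored data to stay under the storage utilization target.
    per_node_budget = policy.storage_per_node_tb * policy.storage_target
    for_storage = math.ceil(storage_used_tb / per_node_budget)
    # Take the larger requirement, then clamp to the configured node range.
    return min(policy.max_nodes, max(policy.min_nodes, for_cpu, for_storage))


policy = AutoscalingPolicy(min_nodes=3, max_nodes=10, cpu_target=0.6,
                           storage_target=0.7, storage_per_node_tb=5.0)
print(recommended_nodes(policy, current_nodes=3, cpu_utilization=0.9, storage_used_tb=2.0))  # 5
```

The minimum keeps headroom for sudden bursts of ingested messages, while the maximum caps cost; whichever of CPU or storage demands more nodes wins.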

Infosys and Google Cloud: Partnership for Database Solutions

Infosys has partnered with Google Cloud to bring the power of Bigtable and other database solutions to our clients. Bigtable has helped our architects solve some of the most demanding scalability and throughput challenges they face when designing systems.

Cloud Bigtable provides best-in-class, predictable performance, with a focus on consistency and low latency. With Bigtable’s rock-solid reliability and resilience in multi-region failure scenarios, Infosys can rely on Google’s databases to build solutions that strengthen customer trust and confidence.

Infosys Modernization Suite

Infosys Modernization Suite, part of Infosys Cobalt, simplifies and accelerates application modernization to the cloud. The Infosys Database Migration Platform, which is part of Infosys Modernization Suite, brings hyper-automation to every stage of database migration to Google Cloud. Platform features include:

- Automated Assessment – A tool-based assessment to determine the feasibility of migrating the existing portfolio of databases to Google Cloud services. It also helps prioritize the databases based on the complexity and feasibility of migration.

- Impact Analysis – Automated analysis to identify the changes required to make the schema, data and code compatible with the target database service. This drives the schedule and effort estimates for the actual migration.

- Schema and Code Conversion – Automated and assisted conversion of the schema and associated code (stored procedures, functions, packages, etc.) using Infosys ML-based conversion.

- Data Migration – Seamless migration of large volumes of data. The platform allows parallel execution of migration streams and complements the capabilities of Google Cloud Database Migration Service (DMS).

- Automated Data and Regression Testing – Automated post-migration testing of the outputs of procedures, functions, packages, etc. reduces data testing effort by up to 40% and ensures the completeness and accuracy of the migrated data. The tests can be repeated on data loaded in increments during a migration.

- Data Masking – Enables testing with live data in lower environments while not compromising on security and privacy requirements.
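The completeness and accuracy checks mentioned in the testing feature above can be illustrated with a generic technique: fingerprint every row in a canonical form and compare the multisets of fingerprints between source and target. This is a minimal sketch of that general idea, not the Infosys platform's implementation; the function names and the JSON-based canonical form are assumptions.

```python
import hashlib
import json
from collections import Counter


def row_fingerprint(row: dict) -> str:
    """Stable hash of one row: keys sorted, values serialized as JSON."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def compare_tables(source_rows, target_rows):
    """Compare two row sets regardless of order; duplicate rows are counted."""
    source = Counter(row_fingerprint(r) for r in source_rows)
    target = Counter(row_fingerprint(r) for r in target_rows)
    return {
        "source_count": sum(source.values()),
        "target_count": sum(target.values()),
        "missing_in_target": sum((source - target).values()),
        "unexpected_in_target": sum((target - source).values()),
    }


source = [{"id": 1, "amount": "10.00"}, {"id": 2, "amount": "5.50"}]
target = [{"amount": "10.00", "id": 1}, {"id": 2, "amount": "5.5"}]
report = compare_tables(source, target)
# Row counts match, but the reformatted amount shows up as one missing and one unexpected row.
```

Matching row counts alone would have hidden the changed `amount`, which is why value-level fingerprints matter; in practice, values also need normalizing (numeric precision, time zones, character encodings) before hashing. Because the comparison is order-independent, it can be rerun on each incremental load.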

Bigtable’s availability of up to 99.999%, peak throughput of more than 5 billion requests per second, and more than 10 exabytes of data under management give us the confidence to adopt it as a primary data store for critical use cases at our clients. Google’s new Database Migration Program will help us accelerate these innovations. Here are some details of the program.

[1] Bigtable overview | Cloud Bigtable Documentation | Google Cloud