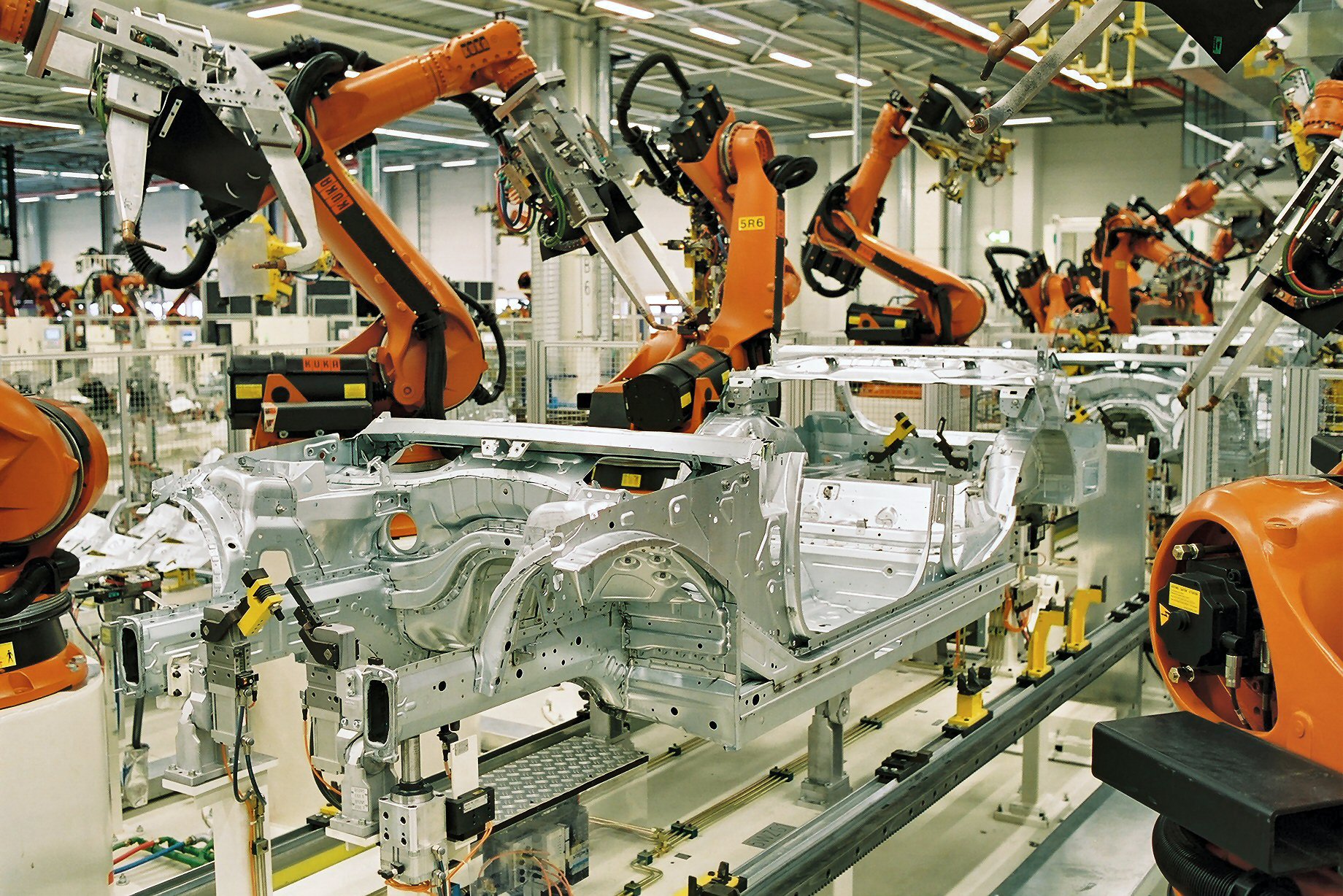

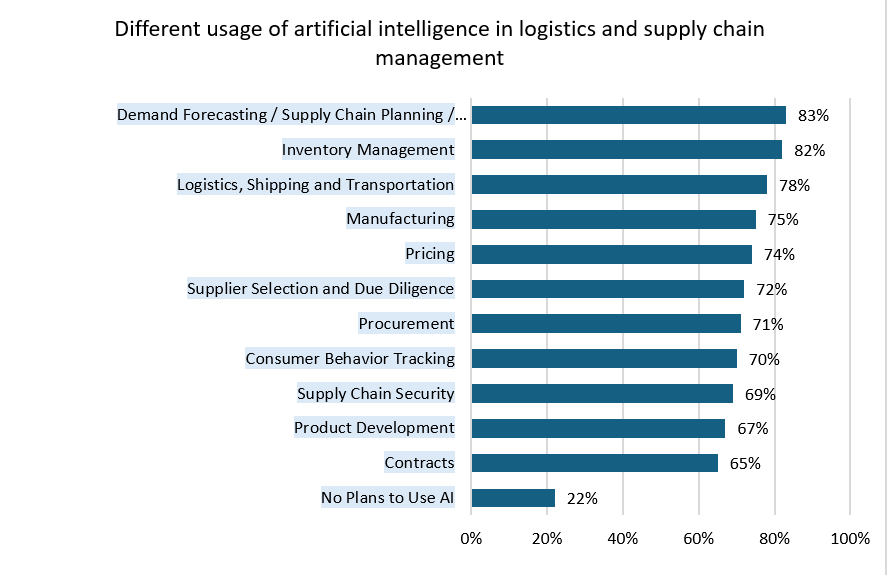

Today, businesses are using AI to work smarter, reduce expenses, and improve accuracy. From retail to healthcare, AI has brought major changes, and logistics is no exception. Figure 1 depicts the impact of AI on logistics and supply chain operations. As we enter the era of Logistics 4.0, AI helps businesses predict outcomes and fine-tune their operations. Companies such as Blue Yonder, Transmetrics, and Baltic Transline are using AI for logistics planning, delivery route optimization, and real-time tracking, while technologies like machine learning, predictive analytics, and robotics are reshaping the sector. A recent study by MHI & Deloitte found that 87% of organizations are already using AI for predictive analytics in supply chain management. AI is also helping companies manage deliveries, warehouses, and invoices more efficiently; in particular, AI-driven automation is transforming invoice processing and accounts payable (AP) workflows. According to SoftCo, 74% of AP departments now use AI for invoice processing and analytics. But as predictive models become more central to financial decision-making, a critical question arises: are these algorithms truly fair?

Figure 1: AI applications in logistics and supply chain management (source: The 2025 MHI Annual Industry Report)

Sources of bias in ML-based invoice processing systems

ML algorithms have become deeply embedded in our daily lives. Like humans, such algorithms are susceptible to biases that lead to unfair outcomes. In decision-making contexts, fairness refers to the absence of prejudice towards an individual or a group based on their inherent or acquired traits. Consequently, an algorithm is considered unfair when its decisions systematically favor a particular group.

Unfairness in invoice processing can arise from various sources. Nowadays, optical character recognition (OCR), natural language processing (NLP), and robotic process automation (RPA) are used to automate invoice validation and shipment scheduling. OCR models are mostly trained on English-language invoices, which introduces bias into the training data; as a result, these models sometimes fail to extract data from invoices written in regional languages. ML models such as convolutional neural networks (CNNs) and Transformers learn patterns from invoice data and capture the context of each invoice, but they can struggle with non-standard invoice formats, leading to unfair rejections or delays for smaller businesses. Differences in invoice layout may also cause processing errors. In addition, vendor characteristics (new, small, or established), incomplete information, currency sensitivity, and the use of sensitive or irrelevant features can all affect system performance. Together, these factors can cause ML models to unintentionally favor certain vendors, regions, or currencies, introducing bias into their decisions.
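One way to surface the language bias described above is to break extraction accuracy down by invoice language instead of reporting a single overall number. The sketch below assumes a labeled evaluation set in which each record holds the invoice language and whether the extracted fields matched the ground truth; the records and the `accuracy_by_group` helper are hypothetical.

```python
from collections import defaultdict

# Hypothetical evaluation records: (invoice_language, extraction_correct)
records = [
    ("en", True), ("en", True), ("en", True), ("en", False),
    ("de", True), ("de", False),
    ("hi", False), ("hi", False), ("hi", True),
]

def accuracy_by_group(records):
    """Return the share of correctly extracted invoices for each group label."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, ok in records:
        totals[group] += 1
        correct[group] += int(ok)
    return {g: correct[g] / totals[g] for g in totals}

acc = accuracy_by_group(records)
# Gap between the best- and worst-served languages
gap = max(acc.values()) - min(acc.values())
for lang, a in sorted(acc.items()):
    print(f"{lang}: {a:.2f}")
print(f"max accuracy gap: {gap:.2f}")
```

Reporting the gap alongside overall accuracy makes it harder for strong results on the dominant language to hide poor results on the others.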

The challenges of fairness in AI systems can be broadly understood through two key lenses: data and algorithms. Data biases, such as measurement bias, omitted-variable bias, and sampling bias, can lead to unfair or inaccurate outcomes in invoice processing. Algorithmic bias, on the other hand, can arise from design choices such as the optimization method or how the model treats different vendor groups, even when the input data is unbiased. User interaction bias may occur if the system interface influences how users respond to invoices, skewing their behavior. Popularity bias can cause frequently used vendors or invoice types to be favored regardless of actual urgency.
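Popularity bias can be checked with an equal-opportunity comparison: among invoices that genuinely were urgent, how often did the model flag each vendor group as a priority? The sketch below assumes ground-truth urgency labels are available; the vendor groups, records, and `true_positive_rate` helper are hypothetical, and equal opportunity is only one of several fairness definitions that could be applied.

```python
# Hypothetical records: (vendor_group, truly_urgent, flagged_priority)
records = [
    ("frequent", True, True), ("frequent", True, True),
    ("frequent", True, True), ("frequent", False, True),
    ("infrequent", True, True), ("infrequent", True, False),
    ("infrequent", True, False), ("infrequent", False, False),
]

def true_positive_rate(records, group):
    """Share of truly urgent invoices from `group` that the model flagged as priority."""
    urgent = [flagged for g, is_urgent, flagged in records if g == group and is_urgent]
    if not urgent:
        raise ValueError(f"no urgent invoices for group {group!r}")
    return sum(urgent) / len(urgent)

tpr_frequent = true_positive_rate(records, "frequent")
tpr_infrequent = true_positive_rate(records, "infrequent")
print(f"TPR frequent:   {tpr_frequent:.2f}")
print(f"TPR infrequent: {tpr_infrequent:.2f}")
# A large positive gap means urgent invoices from infrequent vendors are being overlooked
print(f"equal-opportunity gap: {tpr_frequent - tpr_infrequent:.2f}")
```

Conditioning on true urgency is the key design choice: it separates "frequent vendors send more urgent invoices" from "the model favors frequent vendors."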

iTech, a logistics company, uses AI to handle thousands of freight invoices every day. Its goal was to ensure that clients were charged correctly. As the company grew and took on more clients, its manual invoice processing system could no longer keep up. It was manually processing 60,000 freight invoices daily, each with around 42 line items, and manual entry caused a 12% error rate and a 3- to 5-day processing time. iTech opted to integrate a custom OCR model with ML algorithms, which reduced the error rate by 3%. However, the system still faced challenges with high-volume data processing, data extraction accuracy, and inefficient invoice classification. Another global logistics company, DHL, automated its complete procure-to-pay process across more than 100 countries. In this case, AI and RPA were integrated to automate invoice recognition and matching. The system achieved 100% invoice recognition across multiple ERP systems, reduced manual workloads, and improved global KPI reporting. In recent years, several companies have combined large language models (LLMs) with OCR systems to obtain more accurate invoice processing results. In every case, there is a risk of introducing bias into the machine learning algorithms.
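To put iTech's figures in perspective, a quick calculation of the daily workload helps. It assumes the 12% error rate applies per invoice, which the figures above do not specify.

```latex
\begin{aligned}
\text{line items per day} &= 60{,}000 \times 42 = 2{,}520{,}000 \\
\text{invoices with errors per day} &\approx 0.12 \times 60{,}000 = 7{,}200
\end{aligned}
```

At this volume, an error pattern that falls disproportionately on one vendor group can affect a large number of invoices every day.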

Approaches to reduce bias in invoice processing

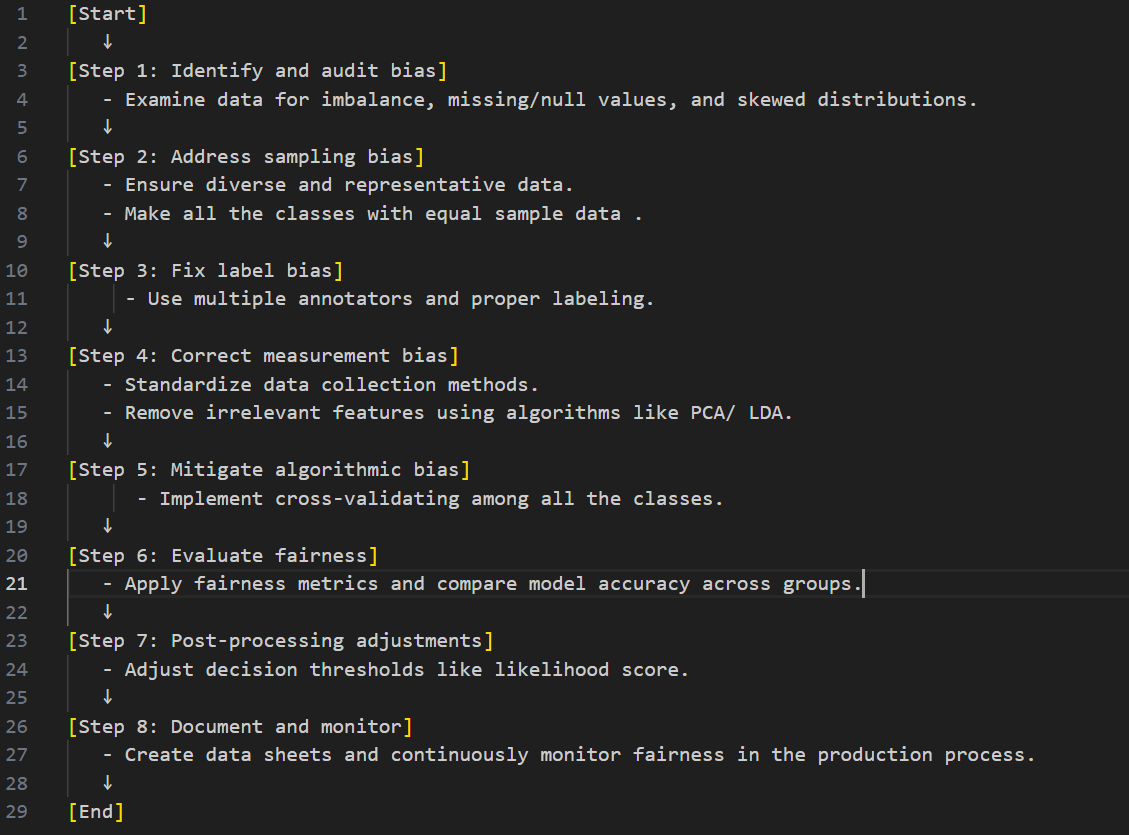

Before bias can be removed, its type must be understood. Bias may take the form of sampling bias, label bias, measurement bias, or algorithmic bias.

Figure 3: Steps to reduce bias in ML-based invoice processing
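Below is a sketch of two steps that commonly precede mitigation: checking for sampling bias by comparing each vendor group's share of the training data with its share of production traffic, then computing reweighing weights (the preprocessing technique of Kamiran and Calders) so that group membership and the approval label become independent in the weighted training set. Reweighing is one option among many and is not necessarily the procedure shown in Figure 3; the data, group names, and helper functions are hypothetical.

```python
from collections import Counter

# Hypothetical training set: (vendor_size, label), where label 1 = invoice approved
train = [
    ("large", 1), ("large", 1), ("large", 1), ("large", 1), ("large", 0), ("large", 1),
    ("small", 1), ("small", 0), ("small", 0), ("small", 0),
]
# Hypothetical share of each vendor group in live (production) traffic
production_share = {"large": 0.5, "small": 0.5}

def representation_ratio(train, production_share):
    """Training share divided by production share; values far from 1.0 suggest sampling bias."""
    counts = Counter(g for g, _ in train)
    n = len(train)
    return {g: (counts[g] / n) / production_share[g] for g in production_share}

def reweighing_weights(train):
    """Reweighing: w(g, y) = P(g) * P(y) / P(g, y).
    In the weighted data, group membership and label are statistically independent."""
    n = len(train)
    count_g = Counter(g for g, _ in train)
    count_y = Counter(y for _, y in train)
    count_gy = Counter(train)
    return {
        (g, y): (count_g[g] / n) * (count_y[y] / n) / (count_gy[(g, y)] / n)
        for (g, y) in count_gy
    }

ratios = representation_ratio(train, production_share)
weights = reweighing_weights(train)
print(ratios)
for key, w in sorted(weights.items()):
    print(key, round(w, 3))
```

Many scikit-learn estimators accept per-sample weights through the `sample_weight` argument of `fit`, so weights like these can be applied without changing the model itself.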

Today's logistics operations involve many activities that require rapid decision-making and real-time problem-solving, so businesses are adopting smarter technologies to stay competitive. For example, Amazon's distribution centers use AI-powered robots to move goods efficiently, machine learning algorithms predict demand and optimize stock levels, and delivery routes are planned using real-time traffic data to make shipping faster and more reliable. As automation becomes more widespread, especially in areas like invoice processing and warehouse management, it is crucial to ensure these systems are not only efficient but also fair. Consider an AI system that prioritizes deliveries based on zip codes: it might unintentionally favor certain regions over others in ways that reflect existing social biases. To address this issue, companies are implementing fairness checks, bias audits, and transparent monitoring systems. DHL, for instance, has started using AI models that are regularly reviewed to ensure fair treatment across different customer segments and geographies. In this shift toward smarter, more responsible operations, technology and ethics go hand in hand to create more transparent and fair systems. Today, fairness is a basic requirement, and by building responsible systems, businesses can create more reliable, inclusive, and equitable logistics solutions.
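A regular bias audit for the zip-code example can start as simply as comparing how often each region's deliveries are prioritized. The sketch below computes per-region selection rates and flags any region whose rate falls below 80% of the best-served region's rate, a threshold borrowed from the "four-fifths" rule of thumb used in US employment-selection guidelines. The audit data, region names, and helper functions are hypothetical.

```python
# Hypothetical audit log: region -> (deliveries prioritized, total deliveries)
audit = {
    "region_a": (480, 1000),
    "region_b": (450, 1000),
    "region_c": (300, 1000),
}

def selection_rates(audit):
    """Fraction of deliveries the model prioritized in each region."""
    return {region: chosen / total for region, (chosen, total) in audit.items()}

def flag_disparate_impact(audit, threshold=0.8):
    """Return {region: impact_ratio} for regions whose selection rate is below
    `threshold` times the highest regional rate (a screening heuristic, not proof of bias)."""
    rates = selection_rates(audit)
    best = max(rates.values())
    return {region: rate / best for region, rate in rates.items() if rate / best < threshold}

flags = flag_disparate_impact(audit)
for region, ratio in flags.items():
    print(f"{region}: impact ratio {ratio:.2f} -> review")
```

Running the same check every audit period and tracking the ratios over time turns a one-off check into the kind of ongoing monitoring described above.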

Key Takeaways

In this article, we highlighted key challenges that can negatively impact AI-powered invoice processing systems, particularly bias and unfairness in data and algorithms. Bias in machine learning can quietly creep into invoice processing systems, often through uneven training data or flawed assumptions. This can lead to errors such as misidentifying vendors, misclassifying invoices, or unfairly delaying payments. For organizations, these issues aren't just technical; they affect relationships, compliance, and trust. Researchers have explored various definitions of fairness and developed methods to detect and reduce bias across machine learning, deep learning, and NLP. By actively addressing bias, companies can make their invoice automation smarter, more consistent, and fairer. The result: fewer errors, smoother operations, and a system that treats every invoice, and every vendor, with the same level of accuracy and respect.