Artificial intelligence (AI) is rapidly reshaping the quality engineering (QE) landscape, delivering intelligent automation, predictive insights, and enhanced decision-making capabilities. However, as organizations integrate AI systems more deeply into business processes and user experiences, critical questions arise about fairness, transparency, and ethics.

In the era of Generative and Agentic AI, trust is no longer a differentiator; it is a necessity. Ethical AI is not merely a technical challenge; it is a strategic imperative that directly impacts brand reputation, regulatory compliance, and user satisfaction.

Why Ethical AI Matters in QE

AI learns from past data, which can carry forward the biases already present in society. Without deliberate checks, these biases can manifest in real-world outcomes, sometimes subtly and at other times overtly, with harmful repercussions. For QE teams, validating AI systems extends beyond functional correctness. They must ensure that models behave responsibly across diverse user groups and scenarios.

Here are some real-world examples that demonstrate how bias can infiltrate AI systems and affect outcomes:

- Credit scoring algorithms offering lower limits to women whose financial profiles are similar to those of men

- Hiring tools penalizing resumes containing gendered language

- Facial recognition systems misidentifying darker-skinned individuals more frequently

These are not just technical flaws; they are ethical failures that underscore the need for bias-aware quality engineering.

Embedding Ethics into AI Validation

At Infosys, ethical AI is a foundational principle. Our QE practices integrate the three core pillars of Responsible AI (fairness, transparency, and explainability) into every phase of the AI lifecycle. This approach ensures that ethical considerations are a core design requirement rather than an afterthought.

We operationalize ethical AI in QE through four key practices:

- Bias-aware test design: Simulating diverse personas across geography, gender, language, and accessibility to ensure fairness under real-world conditions

- Disaggregated validation: Analyzing performance across protected groups to uncover hidden disparities that aggregated metrics might overlook

- Integrated fairness tooling: Using open-source tools such as IBM AI Fairness 360 (AIF360), Microsoft Fairlearn, and Google’s What-If Tool, along with explainability methods such as Shapley Additive Explanations (SHAP) and Local Interpretable Model-Agnostic Explanations (LIME), to assess and explain fairness in AI models

- Continuous monitoring: Tracking ethical key performance indicators (KPIs) in production through fairness dashboards, triggering automated retraining and governance reviews upon breach
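To illustrate the disaggregated validation and continuous monitoring practices above, here is a minimal sketch in plain Python. It is not the Infosys implementation: the function names, the toy data, the group labels `A` and `B`, and the `max_gap` threshold are all assumptions made for illustration. It shows how an aggregate accuracy figure can look acceptable while one group performs far worse, and how a simple gap check could flag a breach for governance review.

```python
from collections import defaultdict


def disaggregated_accuracy(y_true, y_pred, groups):
    """Return overall accuracy and a dict of accuracy per group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    per_group = {g: correct[g] / total[g] for g in total}
    overall = sum(correct.values()) / sum(total.values())
    return overall, per_group


def breaches_threshold(per_group, max_gap):
    """True when the gap between the best and worst group exceeds max_gap."""
    return max(per_group.values()) - min(per_group.values()) > max_gap


# Hypothetical toy data: labels, predictions, and a group attribute.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A"] * 6 + ["B"] * 4

overall, per_group = disaggregated_accuracy(y_true, y_pred, groups)
print(f"overall accuracy: {overall:.2f}")
for group, accuracy in sorted(per_group.items()):
    print(f"group {group}: {accuracy:.2f}")

# The 0.10 threshold is an illustrative assumption, not a standard.
if breaches_threshold(per_group, max_gap=0.10):
    print("fairness KPI breached: flag for governance review")
```

In practice, libraries such as Fairlearn or AIF360 provide richer group metrics; the stdlib version here only makes the underlying idea visible.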

Success Story: Addressing Hidden Bias in AI Deployment

While deploying an AI-powered customer portal for a global scientific equipment provider, Infosys QE teams uncovered a hidden bias. Although initial performance metrics appeared strong, customer feedback revealed dissatisfaction among smaller labs and non-Western users. A data audit exposed a training bias favoring large, Western enterprises.

To address this issue, the QE team implemented the following measures:

- Conducting persona-based testing for underrepresented users

- Applying disaggregated analytics to identify performance gaps

- Defining fairness metrics to measure satisfaction and resolution disparities

The initiative successfully shifted focus from feature completeness to ethical fitness, demonstrating how bias can pose tangible business risks.
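The fairness metrics described in this engagement are not specified in detail, so the following is only a sketch of what satisfaction and resolution disparity measures might look like. The `Ticket` record, the segment labels, the 1 to 5 satisfaction scale, and the sample values are all hypothetical. Each disparity is expressed as the ratio of the worst-served segment to the best-served one, so a value of 1.0 means parity.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Ticket:
    segment: str       # hypothetical customer segment label
    resolved: bool     # whether the portal resolved the request
    satisfaction: int  # assumed 1-5 survey score


def segment_metrics(tickets):
    """Compute resolution rate and average satisfaction per segment."""
    by_segment = {}
    for ticket in tickets:
        by_segment.setdefault(ticket.segment, []).append(ticket)
    return {
        segment: {
            "resolution_rate": mean(1.0 if t.resolved else 0.0 for t in items),
            "avg_satisfaction": mean(t.satisfaction for t in items),
        }
        for segment, items in by_segment.items()
    }


def disparity_ratio(metrics, key):
    """Ratio of the lowest to the highest segment value (1.0 means parity)."""
    values = [m[key] for m in metrics.values()]
    return min(values) / max(values) if max(values) > 0 else 1.0


# Hypothetical sample data, not taken from the engagement.
tickets = [
    Ticket("large_enterprise", True, 5),
    Ticket("large_enterprise", True, 4),
    Ticket("large_enterprise", True, 5),
    Ticket("large_enterprise", True, 4),
    Ticket("small_lab", True, 3),
    Ticket("small_lab", False, 2),
    Ticket("small_lab", True, 4),
    Ticket("small_lab", False, 3),
]

metrics = segment_metrics(tickets)
resolution_disparity = disparity_ratio(metrics, "resolution_rate")
satisfaction_disparity = disparity_ratio(metrics, "avg_satisfaction")
print(f"resolution disparity ratio: {resolution_disparity:.2f}")
print(f"satisfaction disparity ratio: {satisfaction_disparity:.2f}")
```

Tracking these ratios per release, rather than a single aggregate score, is one way to keep gaps for underrepresented segments visible.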

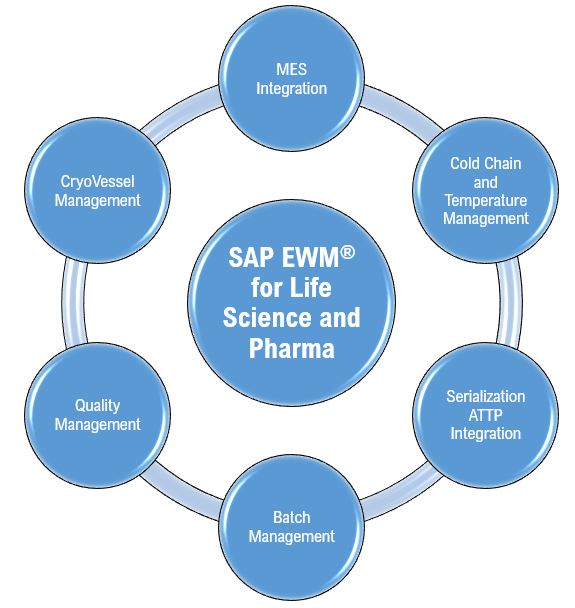

Integrating Technology and Governance

Infosys embeds Responsible AI governance directly into its delivery frameworks to ensure transparency and maintain ethical integrity:

- PolarisEdge is an AI platform that helps organizations get more value from their digital investments by connecting people, processes, data, and technology

- Infosys Topaz, our AI-first suite, follows the Scan, Shield, and Steer framework to embed ethical integrity throughout the AI lifecycle:

  - Scan for fairness risks and potential bias

  - Shield models with privacy and compliance safeguards

  - Steer outcomes through continuous oversight and traceability

The Infosys AI Assurance Framework

AI-infused applications (AiA) introduce a new set of challenges that extend far beyond traditional software systems. To address these complexities, Infosys leverages the AiA=(BR)² framework. This comprehensive approach integrates Benchmarking and Business Assurance with Red Teaming and Responsible AI practices. Benchmarking ensures performance against industry standards, and business assurance aligns AI outcomes with organizational goals. Red teaming tests systems for security lapses and vulnerabilities, while responsible AI practices safeguard ethics and fairness. The resulting combination ensures that AI systems are not only high performing but also secure, ethical, and fully aligned with core business objectives.

Conclusion

Quality engineering must evolve to validate not just what AI systems accomplish, but how and why they reach those outcomes. At Infosys, we have redefined QE to include ethical validation as a core responsibility. With platforms like Infosys Topaz, Infosys AI Assurance Platform, and Polaris, we actively ensure that AI systems are understandable, fair, and ultimately worthy of trust.

As ethical AI becomes central to environmental, social, and governance (ESG) goals as well as global regulatory frameworks, QE teams must lead the charge, not just in building reliable systems, but in building responsible ones.