In today’s data-driven world, traditional databases struggle to efficiently handle the growing volume of unstructured and complex data like images, videos, and text. This is where Vector Databases (Vector DBs) come into play.

Vector databases are specialized databases designed to efficiently store and retrieve vector embeddings. These embeddings, which represent data like text, images, and videos as numerical vectors, are crucial for tasks like:

- Finding similar items (e.g., products, movies) for users.

- Identifying the closest matches for a given input (e.g., image search).

- Understanding and responding to user queries more effectively.

Key features of vector databases include:

- Quickly finding the most similar vectors to a given query.

- Standard database operations (Create, Read, Update, Delete) for managing vector data.

- Combining vector search with traditional filtering based on metadata.

- Easily scaling the database to handle growing data volumes.
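The features above can be sketched with a small in-memory store. This is only an illustration under stated assumptions: `TinyVectorStore`, `Record`, and the `where` filter argument are hypothetical names invented for this example, not the API of any particular vector database, and a real product would use an index rather than scanning every record.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Record:
    vector: list
    metadata: dict = field(default_factory=dict)


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Hypothetical store: CRUD plus similarity search with a metadata filter."""

    def __init__(self):
        self._records = {}

    def upsert(self, record_id, vector, metadata=None):
        # Create or update a record.
        self._records[record_id] = Record(list(vector), dict(metadata or {}))

    def get(self, record_id):
        # Read a record; returns None when the id is unknown.
        return self._records.get(record_id)

    def delete(self, record_id):
        self._records.pop(record_id, None)

    def search(self, query, k=3, where=None):
        # Keep only records whose metadata matches every key in `where`,
        # then rank the survivors by similarity to the query.
        where = where or {}
        candidates = [
            (rid, rec) for rid, rec in self._records.items()
            if all(rec.metadata.get(key) == value for key, value in where.items())
        ]
        scored = [(rid, cosine_similarity(query, rec.vector)) for rid, rec in candidates]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


store = TinyVectorStore()
store.upsert("doc-1", [1.0, 0.0], {"source": "wiki"})
store.upsert("doc-2", [0.9, 0.1], {"source": "crm"})
store.upsert("doc-3", [0.0, 1.0], {"source": "wiki"})

# Only "wiki" records are considered, even though doc-2 is close to the query.
results = store.search([1.0, 0.0], k=2, where={"source": "wiki"})
print(results)
```

Filtering before ranking is one possible design choice; some systems rank first and filter afterwards, which changes how many results survive for a given `k`.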

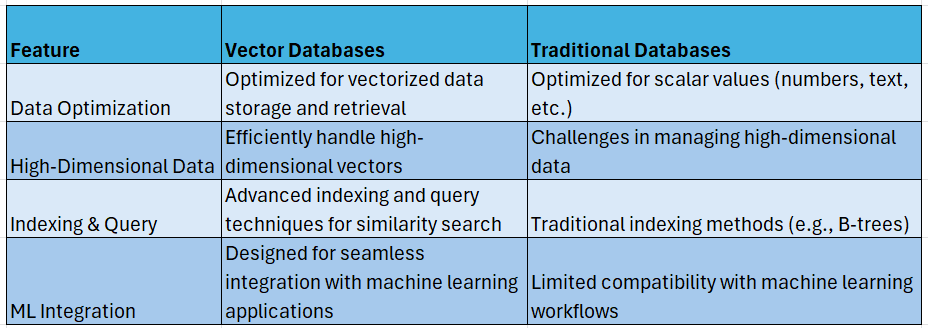

Vector DB vs Traditional DB

The Underlying Technology

Instead of relying on traditional lookup methods such as exact-match indexes or keyword search, Vector DBs use similarity search techniques like:

- k-Nearest Neighbors (k-NN): This algorithm finds the “k” closest vectors to a given query vector in the database.

- Approximate Nearest Neighbor (ANN) search: Faster, approximate versions of k-NN that trade perfect accuracy for speed.
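To make the trade-off concrete, here is a minimal sketch that compares exact k-NN with a very simple approximate search. The approximate variant is an assumption chosen for illustration: it groups vectors into buckets around fixed centroids and searches only the bucket closest to the query. Production ANN indexes are considerably more sophisticated, and the data, centroids, and function names below are made up for this example.

```python
import heapq
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def exact_knn(query, vectors, k):
    """Brute-force k-NN: compare the query against every stored vector."""
    return heapq.nsmallest(k, vectors, key=lambda vid: euclidean(query, vectors[vid]))


def build_buckets(vectors, centroids):
    """Assign each vector id to the bucket of its nearest centroid."""
    buckets = {i: [] for i in range(len(centroids))}
    for vid, vec in vectors.items():
        nearest = min(range(len(centroids)), key=lambda i: euclidean(vec, centroids[i]))
        buckets[nearest].append(vid)
    return buckets


def approximate_knn(query, vectors, centroids, buckets, k):
    """Search only the bucket whose centroid is closest to the query."""
    nearest = min(range(len(centroids)), key=lambda i: euclidean(query, centroids[i]))
    candidates = {vid: vectors[vid] for vid in buckets[nearest]}
    return exact_knn(query, candidates, k)


vectors = {
    "a": (0.0, 0.0),
    "b": (1.0, 0.0),
    "c": (4.9, 0.0),
    "d": (9.0, 0.0),
    "e": (10.0, 0.0),
}
centroids = [(0.5, 0.0), (9.5, 0.0)]  # fixed by hand for the example
buckets = build_buckets(vectors, centroids)

query = (5.2, 0.0)
exact = exact_knn(query, vectors, k=2)
approx = approximate_knn(query, vectors, centroids, buckets, k=2)
print(exact)   # ['c', 'd']
print(approx)  # ['d', 'e']
```

The approximate search inspects two vectors instead of five, but it misses `c`, the true nearest neighbor, because `c` sits just across the bucket boundary. That is the accuracy-for-speed trade in miniature.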

To illustrate this concept, let’s consider an AI chatbot application developed for a specific client. The client faced a significant challenge: their critical business data was scattered across numerous systems. This fragmented data landscape made it extremely difficult for employees to access accurate and timely information.

Often, employees encountered inconsistent search results, received links from various systems, and were forced to navigate through multiple files and systems to find the information they needed. This not only consumed valuable time but also increased the risk of errors and inconsistencies.

To address this data fragmentation issue, a centralized knowledge base was created. This knowledge base serves as a single, unified source of truth, consolidating data from all relevant systems into a single, easily accessible location.

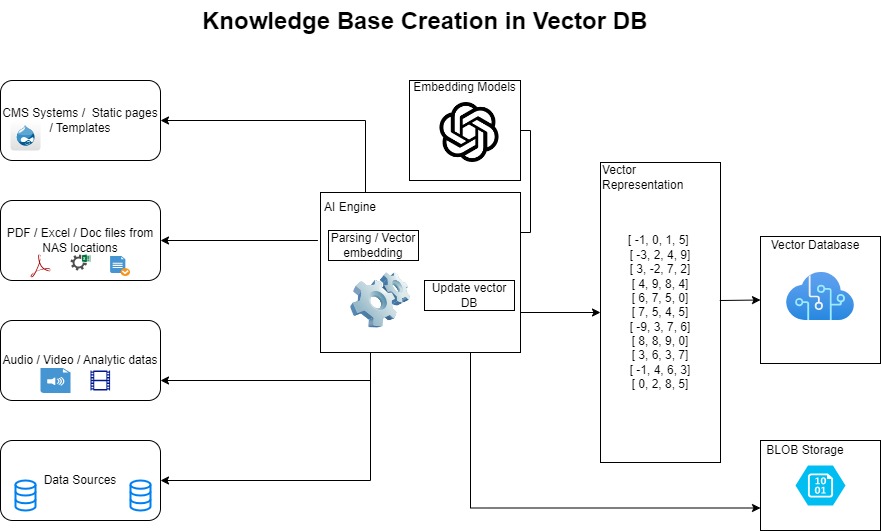

Knowledge Base Creation

The solution begins with the creation of the system-of-record database, which serves as the core data source for the AI chatbot. Offline batch jobs fetch unstructured content such as text, images, audio, and video from the various data sources. An Azure Function written in Python orchestrates the retrieval of all content from these sources.

Once the content is retrieved, the AI engine uses its form recognizer capability to transcribe audio and video files and extract their content. It then splits the text into manageable chunks and tokenizes them. Finally, the AI engine uses OpenAI’s embedding service to generate vector embeddings for these tokenized chunks and stores each embedding in the vector database together with a reference to its source file or content, ready for future use. The files associated with each vector embedding are stored in Blob storage.
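The chunk, embed, and store steps can be sketched as follows. This is an illustration, not the client's actual pipeline: `embed_text` is a hypothetical stand-in that derives a small vector from a hash so the example runs offline (a real system would call the embedding service here), the vector database is modeled as a plain list, and the chunk size, overlap, and file path are arbitrary assumptions.

```python
import hashlib


def chunk_text(text, chunk_size=50, overlap=10):
    """Split text into word-based chunks that overlap slightly."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def embed_text(text, dimensions=8):
    """Hypothetical placeholder for an embedding service call.

    It only produces a deterministic vector; it does NOT capture meaning.
    """
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:dimensions]]


def index_document(file_ref, text, vector_db, chunk_size=50, overlap=10):
    """Store one entry per chunk, each pointing back to its source file."""
    for position, chunk in enumerate(chunk_text(text, chunk_size, overlap)):
        vector_db.append({
            "id": f"{file_ref}#{position}",
            "vector": embed_text(chunk),
            "file_ref": file_ref,  # reference used later to fetch the file
            "text": chunk,
        })


vector_db = []  # stand-in for the real vector database
sample = " ".join(f"word{i}" for i in range(120))
index_document("reports/q1.txt", sample, vector_db)  # hypothetical file path
print(len(vector_db), vector_db[0]["id"])
```

Overlapping chunks are a common way to avoid cutting a sentence's context at a chunk boundary; whether the original solution used overlap is not stated in this post.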

Data retrieval

The rise of powerful LLMs like GPT-4 has revolutionized how we interact with data. By integrating LLMs with Vector Databases, we can let users query their data in natural language and ground the responses in the factual content stored in the database.

However, question-answering often involves more than simply retrieving data. It may require analyzing and processing the information. Therefore, incorporating an agent-based framework like LangChain between the application’s user interface and the Vector Database can significantly enhance capabilities. This framework allows for more complex interactions, enabling the LLM to not only query the data but also reason over it.

This synergy between LLMs and Vector Databases arises from their shared reliance on embeddings. Vector Databases store data as embeddings, and the user’s query is converted into an embedding by the same embedding model, so relevant content can be found by direct comparison within the embedding space. The retrieved content is then passed to the LLM, effectively transforming the Vector Database into a knowledge base accessible to the LLM.

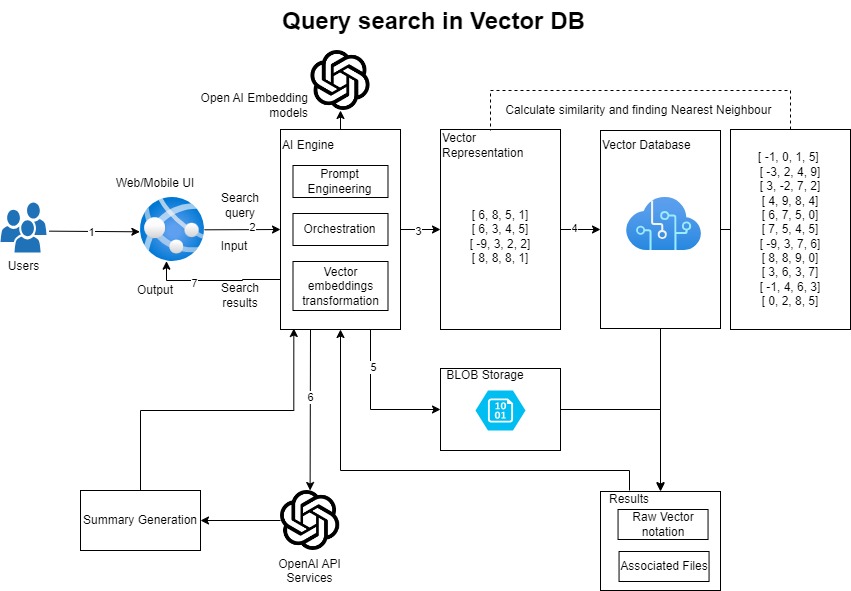

1) A user accesses the AI chatbot application via a web browser and enters a search query into the chat window.

2) The AI engine, developed using Python, receives the user’s search query. It then processes and formats the query with an appropriate prompt for the LLM.

3) The AI engine utilizes OpenAI’s text embedding models to convert the user’s search query into a vector representation.

4) The AI engine sends this vector to the Vector Database and performs a nearest neighbor search to find similar vectors within the database. Along with the similar vectors, it also retrieves the corresponding file reference IDs.

5) The AI engine uses the retrieved file reference IDs to access and retrieve the associated files from storage.

6) The AI engine then sends the user’s search query, together with the content of the retrieved files, to OpenAI API services. The LLM analyzes these inputs and generates a detailed summary.

7) The user interface (UI) displays the summary generated by the OpenAI LLM on the page. Additionally, it presents links to the relevant files for further user exploration.
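Steps 3 to 7 above can be sketched end to end as shown below. Everything here is a simplified assumption made so the example runs offline: `toy_embed` is a word-count stand-in for the embedding model, `fake_llm` stands in for the LLM call, the index is a plain list, and the file names and contents are invented. The sketch also passes the retrieved text to the model in the prompt, which is an assumption about how the request is assembled.

```python
import math

# Toy vocabulary used by the stand-in embedding (assumption for this example).
VOCABULARY = ["invoice", "refund", "policy", "vacation", "leave"]


def toy_embed(text):
    """Hypothetical embedding: counts of vocabulary words in the text."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCABULARY]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def nearest_neighbors(query_vector, index, k):
    """Rank index entries by similarity to the query and keep the top k."""
    ranked = sorted(
        index,
        key=lambda entry: cosine_similarity(query_vector, entry["vector"]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, passages):
    context = "\n\n".join(f"[{ref}]\n{text}" for ref, text in passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def answer(question, index, file_store, llm, k=1):
    query_vector = toy_embed(question)                       # step 3
    hits = nearest_neighbors(query_vector, index, k)         # step 4
    passages = [(hit["file_ref"], file_store[hit["file_ref"]])
                for hit in hits]                             # step 5
    summary = llm(build_prompt(question, passages))          # step 6
    return {"summary": summary,
            "links": [ref for ref, _ in passages]}           # step 7


def fake_llm(prompt):
    """Stand-in for the LLM call: echoes the last line of the prompt."""
    return prompt.splitlines()[-1]


file_store = {  # stand-in for file storage; contents are invented
    "hr/leave.txt": "Employees accrue vacation leave monthly under the leave policy",
    "finance/refunds.txt": "A refund is issued against the original invoice",
}
index = [{"file_ref": ref, "vector": toy_embed(text)}
         for ref, text in file_store.items()]

result = answer("how do I get a refund for an invoice", index, file_store, fake_llm)
print(result["links"])
```

In a real deployment, `toy_embed` and `fake_llm` would be replaced by calls to the embedding and chat services, and `nearest_neighbors` by a query against the vector database.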

In conclusion, this use case demonstrates the power of Vector Databases in revolutionizing how organizations access and interact with their data.

As AI and data technologies continue to evolve, Vector Databases will play an increasingly critical role in helping organizations harness the full potential of their information assets.

In future blog posts, we will provide more in-depth discussions of various use cases.