Introduction

Level 5 autonomous cars operate without human intervention: integrated digital ecosystems assume full responsibility for navigation, passenger safety, and the overall passenger experience. The multimodal sensors on these vehicles generate terabytes of data, including telemetry logs, high-definition video, LiDAR point clouds, and radar returns, all of which must be ingested, processed, and stored. Critical operations such as emergency braking and collision avoidance require deterministic latencies below 10 ms. At the same time, the in-vehicle infotainment (IVI) subsystem must deliver high-definition video, immersive audio, and uninterrupted connectivity to passengers.
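To put the 10 ms figure in perspective, the short calculation below converts a latency budget into the distance a vehicle travels before it can react. The speed of 130 km/h is an assumed highway speed chosen for illustration; it does not come from the text.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the distance in metres covered while a control decision is pending."""
    speed_m_per_s = speed_kmh / 3.6          # convert km/h to m/s
    return speed_m_per_s * (latency_ms / 1000.0)

# Assumed highway speed of 130 km/h (illustrative only).
for latency in (10, 50, 100):
    print(f"{latency:>3} ms -> {distance_during_latency(130, latency):.2f} m")
# Output:
#  10 ms -> 0.36 m
#  50 ms -> 1.81 m
# 100 ms -> 3.61 m
```

At 10 ms the vehicle moves roughly a third of a metre before it can respond, compared with more than 3.5 m at 100 ms.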

Digital Infrastructure Requirements

Fully autonomous operation depends on three core digital capabilities:

1. Scalable Big-Data Management

Multimodal data can be managed with a tiered storage hierarchy that spans in-vehicle flash arrays, edge-site caches, and cloud data lakes. This hierarchy supports policy-driven retention and fast data retrieval. Sensor-embedded data stores index metadata, and distributed message queues (such as Apache Kafka) provide reliable, partition-ordered event delivery.
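Policy-driven retention across the three tiers can be sketched as a function that maps each record to a tier by age and safety relevance. The thresholds, the `Record` fields, and the rule that safety events always go to the data lake are hypothetical choices for illustration; real retention policies are fleet- and regulation-specific.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class Tier(Enum):
    VEHICLE_FLASH = "in-vehicle flash"
    EDGE_CACHE = "edge-site cache"
    CLOUD_LAKE = "cloud data lake"

@dataclass
class Record:
    kind: str            # e.g. "lidar", "video", "telemetry"
    age_hours: float     # time since capture
    safety_event: bool   # captured during a braking or collision event

# Hypothetical policy thresholds in hours; real values are fleet-specific.
VEHICLE_HOURS = 1.0
EDGE_HOURS = 24.0
RETENTION_HOURS = 30 * 24.0

def storage_tier(record: Record) -> Optional[Tier]:
    """Pick a tier for a record, or None if it has passed its retention period."""
    if record.safety_event:
        return Tier.CLOUD_LAKE       # keep safety-relevant data for long-term analysis
    if record.age_hours < VEHICLE_HOURS:
        return Tier.VEHICLE_FLASH    # hot data stays on board for fast retrieval
    if record.age_hours < EDGE_HOURS:
        return Tier.EDGE_CACHE       # warm data moves to the nearest edge site
    if record.age_hours < RETENTION_HOURS:
        return Tier.CLOUD_LAKE       # cold data is archived in the data lake
    return None                      # expired: eligible for deletion

for rec in (Record("lidar", 0.5, False), Record("video", 6.0, False),
            Record("telemetry", 200.0, False), Record("radar", 2000.0, False),
            Record("video", 2000.0, True)):
    tier = storage_tier(rec)
    print(rec.kind, rec.age_hours, tier.value if tier else "delete")
```

Keeping the policy in one pure function makes it easy to audit and to change thresholds without touching the storage back ends.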

2. Deterministic Low-Latency Control

Deterministic low-latency control is provided by real-time operating systems (RTOS) with pre-emptive scheduling and hardware interrupt handling, combined with hardware-accelerated Time-Sensitive Networking (TSN) Ethernet. Safety-critical control loops for steering and braking run on microcontrollers isolated in mixed-criticality partitions.
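Pre-emptive fixed-priority scheduling is what makes this timing analyzable. One classic check, rate-monotonic analysis with the Liu and Layland utilization bound, is sketched below as an illustration; it is not necessarily how any particular RTOS vendor certifies its control loops. The task set is hypothetical, and deadlines are assumed equal to periods.

```python
def rm_bound(n: int) -> float:
    """Liu & Layland bound: n independent periodic tasks with rate-monotonic
    priorities always meet their deadlines if utilization <= n(2^(1/n) - 1)."""
    return n * (2 ** (1.0 / n) - 1)

def rm_schedulable(tasks):
    """tasks: list of (wcet_ms, period_ms). Sufficient, not necessary, test."""
    utilization = sum(c / t for c, t in tasks)
    return utilization <= rm_bound(len(tasks)), utilization

# Hypothetical control tasks: (worst-case execution time, period) in ms.
tasks = [(1.0, 5.0),    # braking control loop
         (2.0, 10.0),   # steering control loop
         (3.0, 20.0)]   # sensor-fusion update
ok, u = rm_schedulable(tasks)
print(f"U = {u:.3f}, bound = {rm_bound(len(tasks)):.3f}, schedulable: {ok}")
# Output: U = 0.550, bound = 0.780, schedulable: True
```

Because the bound is only sufficient, a task set that exceeds it may still be schedulable; exact response-time analysis would be needed to decide such cases.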

3. Adaptive Compute Allocation

Hardware virtualization frameworks allocate CPU cores, accelerators, and memory according to each task's criticality. On-chip monitors dynamically adjust voltage and clock frequency to meet real-time priorities while minimizing energy consumption.
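The voltage and frequency adjustment can be illustrated with a simple static policy: choose the lowest operating point at which the task set still fits. This sketch assumes that execution time scales inversely with clock frequency and uses the single-core EDF utilization limit of 1.0; the operating points and task set are hypothetical.

```python
# Hypothetical DVFS operating points: (frequency in MHz, relative power).
OPERATING_POINTS = [(600, 0.25), (1200, 0.5), (1800, 0.8), (2400, 1.0)]
F_MAX = 2400

def utilization_at(tasks, freq_mhz):
    """Utilization when WCETs measured at F_MAX are stretched to freq_mhz."""
    scale = F_MAX / freq_mhz
    return sum(wcet * scale / period for wcet, period in tasks)

def pick_operating_point(tasks, limit=1.0):
    """Return the lowest-frequency point whose scaled utilization fits the limit."""
    for freq, power in sorted(OPERATING_POINTS):
        if utilization_at(tasks, freq) <= limit:
            return freq, power
    return None  # not schedulable even at full speed

# Hypothetical tasks: (WCET at F_MAX, period), both in ms.
tasks = [(2.0, 10.0), (2.0, 20.0), (3.0, 30.0)]
print(pick_operating_point(tasks))
# Output: (1200, 0.5)
```

Running at 600 MHz would push utilization to 1.6, so 1200 MHz is the most energy-efficient point that still meets every deadline under these assumptions.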

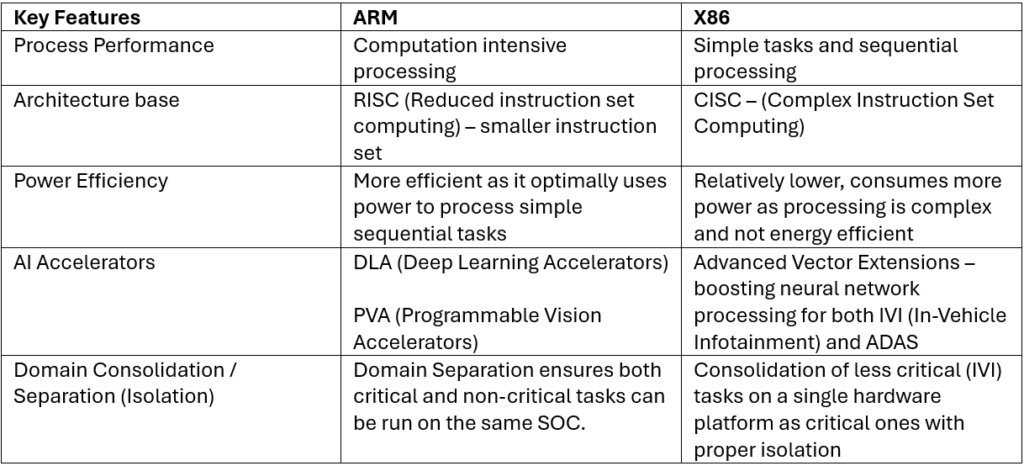

Architectural Landscape and SoC Design

The architectural ecosystem supporting autonomous vehicles has evolved around heterogeneous chip configurations built on x86 and ARM foundations. Domain separation techniques let critical functions, such as ADAS and drive-by-wire control, run in dedicated partitions, while non-critical tasks, such as infotainment, share resources through virtualization without interfering with them. These boundaries are enforced with hardware virtualization, temporal partitioning, and memory protection units (MPUs).

Consolidating these domains onto shared silicon reduces system complexity and power consumption and lowers hardware costs by 15–20%.
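Temporal partitioning is commonly realized as a fixed cyclic schedule in which each partition owns recurring time windows within a major frame, in the style of ARINC 653 avionics partitioning. The sketch below validates such a window table and reports which partition owns the CPU at a given instant; the partition names, window lengths, and 10 ms frame are hypothetical.

```python
from typing import List, Optional, Tuple

MAJOR_FRAME_MS = 10.0

# Hypothetical window table: (partition, start_ms, duration_ms) within one frame.
WINDOWS: List[Tuple[str, float, float]] = [
    ("adas", 0.0, 4.0),
    ("drive_by_wire", 4.0, 2.0),
    ("infotainment", 6.0, 3.5),
]

def validate(windows, frame_ms) -> float:
    """Raise ValueError on overlap or overrun; return idle slack per frame."""
    cursor = 0.0
    for name, start, duration in sorted(windows, key=lambda w: w[1]):
        if start < cursor:
            raise ValueError(f"{name} overlaps the previous window")
        if start + duration > frame_ms:
            raise ValueError(f"{name} exceeds the {frame_ms} ms major frame")
        cursor = start + duration
    return frame_ms - sum(d for _, _, d in windows)

def partition_at(windows, t_ms, frame_ms) -> Optional[str]:
    """Return the partition that owns the CPU at absolute time t_ms (None = idle)."""
    offset = t_ms % frame_ms
    for name, start, duration in windows:
        if start <= offset < start + duration:
            return name
    return None

print(validate(WINDOWS, MAJOR_FRAME_MS))             # 0.5
print(partition_at(WINDOWS, 27.0, MAJOR_FRAME_MS))   # infotainment
```

Because the infotainment window is fixed, a misbehaving media application can exhaust only its own 3.5 ms slice and cannot delay the ADAS or drive-by-wire windows.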

Advanced SoC designs further incorporate:

1. Resource Partitioning: Shared CPU cores, memory, and I/O resources are allocated via hardware virtualization based on task criticality, ensuring predictable performance and preventing resource contention.

2. Specialized Communication Protocols: High-integrity interconnects (e.g., automotive Ethernet with Time-Sensitive Networking, CAN-FD) and on-chip message fabrics are employed to guarantee reliable, low-latency data exchanges between functional domains.
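One concrete detail behind the CAN-FD interconnect is its framing: a CAN-FD data frame carries up to 64 bytes, but only specific payload sizes can be encoded in the 4-bit data length code (DLC). The helpers below are hypothetical and not taken from any particular CAN library; they pick the smallest valid DLC and pad a payload to the matching length, with a zero fill byte assumed.

```python
# CAN-FD DLC values 0-8 map directly to byte counts; 9-15 map to larger sizes.
DLC_TO_LEN = [0, 1, 2, 3, 4, 5, 6, 7, 8, 12, 16, 20, 24, 32, 48, 64]

def dlc_for_payload(n_bytes: int) -> int:
    """Return the smallest DLC whose frame length can hold n_bytes."""
    if not 0 <= n_bytes <= 64:
        raise ValueError("CAN-FD frames carry at most 64 data bytes")
    for dlc, length in enumerate(DLC_TO_LEN):
        if length >= n_bytes:
            return dlc
    raise AssertionError("unreachable")

def pad_payload(payload: bytes, fill: int = 0x00) -> bytes:
    """Pad payload to the exact length encoded by its DLC (fill byte assumed)."""
    length = DLC_TO_LEN[dlc_for_payload(len(payload))]
    return payload + bytes([fill]) * (length - len(payload))

frame = pad_payload(bytes(range(10)))
print(len(frame), dlc_for_payload(10))   # 12 9
```

A 10-byte signal therefore occupies a 12-byte frame, which is worth considering when packing signals to keep bus load predictable.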

Edge–Cloud Compute Paradigm

The combined need for large-scale analytics and low-latency decision-making has led to a hybrid architecture. On edge compute nodes, located either inside the vehicle or at nearby roadside units, lightweight container-orchestration distributions manage containerized perception pipelines and inference engines and enable rapid failover. These nodes run lane-keeping, emergency-response, and collision-avoidance algorithms locally, keeping response times under 10 ms and reducing dependence on the network.

Cloud services handle anonymized fleet data used for machine-learning model training, fleet management, and over-the-air software distribution. Distributed storage systems (HDFS, Amazon S3) provide petabyte-scale capacity, and GPU and TPU clusters (e.g., NVIDIA A100, Google TPU) support neural-network retraining. Only non-critical tasks, such as map updates, behavioural analytics, and user-personalization models, are offloaded to the cloud; this makes better use of available bandwidth and keeps the vehicle operational in areas with limited connectivity.
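The edge–cloud split can be framed as a placement decision driven by each task's deadline and the current connectivity state. In the sketch below, a task runs in the cloud only when it is non-critical and a network round trip fits within its deadline, and non-critical work is deferred when the link is down. The task list, deadlines, and 80 ms round-trip estimate are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Task:
    name: str
    deadline_ms: float
    safety_critical: bool

def choose_site(task: Task, cloud_rtt_ms: Optional[float]) -> str:
    """Return 'edge', 'cloud', or 'defer'. cloud_rtt_ms is None when offline."""
    if task.safety_critical:
        return "edge"      # safety loops never wait on the network
    if cloud_rtt_ms is None:
        return "defer"     # queue non-critical work until connectivity returns
    if cloud_rtt_ms > task.deadline_ms:
        return "edge"      # the cloud cannot answer in time, so run locally
    return "cloud"

tasks = [Task("collision_avoidance", 10, True),
         Task("lane_keeping", 10, True),
         Task("map_update", 60_000, False),
         Task("behavioural_analytics", 3_600_000, False)]

for rtt in (80.0, None):   # hypothetical: 80 ms round trip, then no coverage
    print(rtt, [(t.name, choose_site(t, rtt)) for t in tasks])
```

Checking safety criticality first means the control path behaves identically whether or not the vehicle has coverage.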

In-Vehicle Infotainment (IVI) Systems

IVI systems have evolved into fully integrated digital cockpits that can project augmented-reality content and 3D navigation overlays onto head-up displays. Multi-domain virtualization allows navigation, media streaming, productivity software, and passenger-specific features to run simultaneously. Machine-learning-driven human-machine interface (HMI) modules and natural-language-processing engines provide intuitive, multi-sensory user interfaces.

High-speed interfaces such as PCIe Gen4, HDMI 2.1, and USB-C support multiple high-resolution displays. Integrated 5G and Wi-Fi 6E connectivity keeps streaming services available. Secure over-the-air (OTA) update frameworks use end-to-end encryption to manage software releases and digital-certificate provisioning across an entire vehicle fleet.
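As a simplified illustration of the integrity checks an OTA framework performs before installing an update, the sketch below verifies a downloaded image against a manifest using a SHA-256 digest and an HMAC tag from Python's standard library. This is a stand-in built on assumptions: production OTA frameworks typically rely on asymmetric signatures and certificate chains rather than a shared key, payload encryption is not shown, and the manifest fields and key are hypothetical.

```python
import hashlib
import hmac
import json

def sign_manifest(manifest: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the manifest."""
    body = json.dumps(manifest, sort_keys=True).encode()
    return hmac.new(key, body, hashlib.sha256).hexdigest()

def verify_update(image: bytes, manifest: dict, tag: str, key: bytes) -> bool:
    """Accept the image only if the manifest is authentic and the digest matches."""
    if not hmac.compare_digest(sign_manifest(manifest, key), tag):
        return False   # manifest altered or tagged with a different key
    digest = hashlib.sha256(image).hexdigest()
    return hmac.compare_digest(digest, manifest["sha256"])

key = b"hypothetical-fleet-key"   # a shared key is an illustration-only assumption
image = b"firmware v2.1 payload"
manifest = {"version": "2.1", "sha256": hashlib.sha256(image).hexdigest()}
tag = sign_manifest(manifest, key)

print(verify_update(image, manifest, tag, key))          # True
print(verify_update(image + b"x", manifest, tag, key))   # False: image tampered
```

`hmac.compare_digest` is used instead of `==` so that comparisons take constant time and do not leak how many characters matched.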

OEM–Chip Supplier Collaborations

Automotive OEMs and semiconductor suppliers have formed partnerships to align their ADAS and IVI development roadmaps. Notable collaborations include:

1. Volkswagen and Mobileye/NXP: Deployment of EyeQ6H/L and S32G3 (ARM/RISC‑V) SoCs enables L1–L4 ADAS features, including Travel Assist and Emergency Assist, augmented by MIB4 AR displays, 5G OTA updates, V2X Car‑to‑X coordination, and predictive maintenance.

2. Sony Honda Mobility (Afeela) and Qualcomm/Sony: Implementation of Snapdragon Digital Chassis and Sony IMX sensors (ARM) targets L2+/L3 autonomy by 2026, integrating Unreal Engine–based 3D mapping, AI-driven virtual agents, and secure 5G V2X connectivity.

3. Geely (Zeekr) and Intel: Utilization of an AI-enhanced SDV SoC (x86) supports driver monitoring, adaptive cruise control, generative-AI IVI assistants, and AI-driven energy management.

4. BMW and Mobileye/Qualcomm: Integration of EyeQ6H/L and Snapdragon Ride Flex (ARM/RISC‑V) SoCs delivers L2++ SuperVision, highway pilot functionality, pillar‑to‑pillar display systems, and predictive diagnostics over 5G V2X.

5. Tesla and AMD: Incorporation of Versal AI Edge XA and Ryzen V2000A (ARM+x86) SoCs provides L2+/L3 in-cabin monitoring, automated parking, multi-display digital clusters, and a PC-like infotainment experience with integrated gaming capabilities.

These alliances emphasize:

1. Centralized zonal architectures for reduced wiring complexity and improved serviceability.

2. Mixed-criticality SoC consolidation, merging ADAS and IVI modules onto shared silicon to cut hardware costs by up to 20%.

3. AI-driven feature enhancements resulting in approximately 30% reductions in accident rates.

Conclusion

L4 autonomy is expected to scale across geofenced ride-hailing services and consumer EVs between 2027 and 2030, enabled by sub-3 nm chiplets, hybrid ARM/RISC-V systems on chip (SoCs), and dynamic power-scaling technologies. Manufacturers such as Geely, Ford, Stellantis, and Honda are expected to adopt these advances, and cybersecurity improvements are anticipated to reduce system vulnerability exposure by 40%. From 2031, full L5 autonomy is expected to be enabled by passenger environments with integrated virtual reality (VR) and by advanced hardware architectures, such as neuromorphic SoCs from Renesas, quantum-enhanced designs from Qualcomm, 3D network functions and RISC-V frameworks from NVIDIA, and quantum accelerators from Intel. These technologies are anticipated to deliver 10–15% range improvements, sub-microsecond processing latencies, and zero-trust security that complies with international automotive standards.